Scientific Computing

Numerical simulation of real-world phenomena provides fertile ground for building interdisciplinary relationships. The SCI Institute has a long tradition of building these relationships in a win-win fashion – a win for the theoretical and algorithmic development of numerical modeling and simulation techniques and a win for the discipline-specific science of interest. High-order and adaptive methods, uncertainty quantification, complexity analysis, and parallelization are just some of the topics being investigated by SCI faculty. These areas of computing are being applied to a wide variety of engineering applications ranging from fluid mechanics and solid mechanics to bioelectricity.

Valerio Pascucci

Scientific Data Management

Chris Johnson

Problem Solving Environments

Ross Whitaker

GPUs

Chuck Hansen

GPUs

Funded Research Projects:

Publications in Scientific Computing:

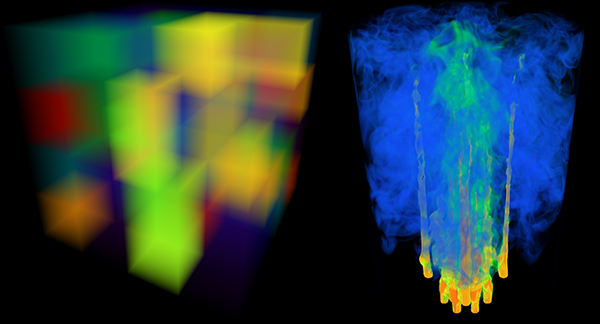

Radiative Heat Transfer Calculation on 16384 GPUs Using a Reverse Monte Carlo Ray Tracing Approach with Adaptive Mesh Refinement. A. Humphrey, D. Sunderland, T. Harman, M. Berzins. In 2016 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), pp. 1222-1231. May, 2016. DOI: 10.1109/IPDPSW.2016.93

Modeling thermal radiation is computationally challenging in parallel due to its all-to-all physical and resulting computational connectivity, and is also the dominant mode of heat transfer in practical applications such as next-generation clean coal boilers, being modeled by the Uintah framework. However, a direct all-to-all treatment of radiation is prohibitively expensive on large computer systems, whether homogeneous or heterogeneous. DOE Titan and the planned DOE Summit and Sierra machines are examples of current and emerging GPU-based heterogeneous systems where the increased processing capability of GPUs over CPUs exacerbates this problem. These systems require that computational frameworks like Uintah leverage an arbitrary number of on-node GPUs, while simultaneously utilizing thousands of GPUs within a single simulation. We show that radiative heat transfer problems can be made to scale within Uintah on heterogeneous systems through a combination of reverse Monte Carlo ray tracing (RMCRT) techniques combined with AMR, to reduce the amount of global communication. In particular, significant Uintah infrastructure changes, including a novel lock and contention-free, thread-scalable data structure for managing MPI communication requests and improved memory allocation strategies were necessary to achieve excellent strong scaling results to 16384 GPUs on Titan.

Extending the Uintah Framework through the Petascale Modeling of Detonation in Arrays of High Explosive Devices. M. Berzins, J. Beckvermit, T. Harman, A. Bezdjian, A. Humphrey, Q. Meng, J. Schmidt, C. Wight. In SIAM Journal on Scientific Computing, Vol. 38, No. 5, pp. S101-S122. 2016. DOI: 10.1137/15M1023270

The Uintah framework for solving a broad class of fluid-structure interaction problems uses a layered taskgraph approach that decouples the problem specification as a set of tasks from the adaptive runtime system that executes these tasks. Uintah has been developed by using a problem-driven approach that dates back to its inception. Using this approach it is possible to improve the performance of the problem-independent software components to enable the solution of broad classes of problems as well as the driving problem itself. This process is illustrated by a motivating problem that is the computational modeling of the hazards posed by thousands of explosive devices during a Deflagration to Detonation Transition (DDT) that occurred on Highway 6 in Utah. In order to solve this complex fluid-structure interaction problem at the required scale, algorithmic and data structure improvements were needed in a code that already appeared to work well at scale. These transformations enabled scalable runs for our target problem and provided the capability to model the transition to detonation. The performance improvements achieved are shown and the solution to the target problem provides insight as to why the detonation happened, as well as to a possible remediation strategy.

Approximating the Generalized Voronoi Diagram of Closely Spaced Objects. J. Edwards, E. Daniel, V. Pascucci, C. Bajaj. In Computer Graphics Forum, Vol. 34, No. 2, Wiley-Blackwell, pp. 299-309. May, 2015. DOI: 10.1111/cgf.12561

Generalized Voronoi Diagrams (GVDs) have far-reaching applications in robotics, visualization, graphics, and simulation. However, while the ordinary Voronoi Diagram has mature and efficient algorithms for its computation, the GVD is difficult to compute in general, and in fact, has only approximation algorithms for anything but the simplest of datasets. Our work is focused on developing algorithms to compute the GVD efficiently and with bounded error on the most difficult of datasets: those with objects that are extremely close to each other.

Paint and Click: Unified Interactions for Image Boundaries. B. Summa, A. A. Gooch, G. Scorzelli, V. Pascucci. In Computer Graphics Forum, Vol. 34, No. 2, Wiley-Blackwell, pp. 385-393. May, 2015. DOI: 10.1111/cgf.12568

Image boundaries are a fundamental component of many interactive digital photography techniques, enabling applications such as segmentation, panoramas, and seamless image composition. Interactions for image boundaries often rely on two complementary but separate approaches: editing via painting or clicking constraints. In this work, we provide a novel, unified approach for interactive editing of pairwise image boundaries that combines the ease of painting with the direct control of constraints. Rather than a sequential coupling, this new formulation allows full use of both interactions simultaneously, giving users unprecedented flexibility for fast boundary editing. To enable this new approach, we provide technical advancements. In particular, we detail a reformulation of image boundaries as a problem of finding cycles, expanding and correcting limitations of the previous work. Our new formulation provides boundary solutions for painted regions with performance on par with state-of-the-art specialized, paint-only techniques. In addition, we provide instantaneous exploration of the boundary solution space with user constraints. Finally, we provide examples of common graphics applications impacted by our new approach.

Reducing overhead in the Uintah framework to support short-lived tasks on GPU-heterogeneous architectures. B. Peterson, H. K. Dasari, A. Humphrey, J.C. Sutherland, T. Saad, M. Berzins. In Proceedings of the 5th International Workshop on Domain-Specific Languages and High-Level Frameworks for High Performance Computing (WOLFHPC'15), ACM, pp. 4:1-4:8. 2015. DOI: 10.1145/2830018.2830023

Developing Uintah’s Runtime System For Forthcoming Architectures, Subtitled “Refereed paper presented at the RESPA 15 Workshop at SuperComputing 2015 Austin Texas,” B. Peterson, N. Xiao, J. Holmen, S. Chaganti, A. Pakki, J. Schmidt, D. Sunderland, A. Humphrey, M. Berzins. SCI Institute, 2015.

Spectral and High Order Methods for Partial Differential Equations, Subtitled “Selected Papers from the ICOSAHOM'14 Conference, June 23-27, 2014, Salt Lake City, UT, USA.,” R.M. Kirby, M. Berzins, J.S. Hesthaven (Editors). In Lecture Notes in Computational Science and Engineering, Springer, 2015.

Improving Accuracy In Particle Methods Using Null Spaces and Filters. C. Gritton, M. Berzins, R. M. Kirby. In Proceedings of the IV International Conference on Particle-Based Methods - Fundamentals and Applications, Barcelona, Spain, Edited by E. Onate and M. Bischoff and D.R.J. Owen and P. Wriggers and T. Zohdi, CIMNE, pp. 202-213. September, 2015. ISBN: 978-84-944244-7-2

While particle-in-cell type methods, such as MPM, have been very successful in providing solutions to many challenging problems, there are some important issues that remain to be resolved with regard to their analysis. One such challenge relates to the difference in dimensionality between the particles and the grid points to which they are mapped. There exists a non-trivial null space of the linear operator that maps particle values onto nodal values. In other words, there are non-zero particle values that when mapped to the nodes are zero there. Given positive mapping weights such null space values are oscillatory in nature. The null space may be viewed as a more general form of the ringing instability identified by Brackbill for PIC methods. It will be shown that it is possible to remove these null-space values from the solution and so to improve the accuracy of PIC methods, using a matrix SVD approach. The expense of doing this is prohibitive for real problems and so a local method is developed for doing this.
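The null-space idea in the abstract above can be illustrated with a small sketch. This is not the authors' code or their local method: it is a minimal global SVD example, assuming a 1D uniform grid, piecewise-linear (hat function) mapping weights, and hypothetical helper names `linear_weights` and `remove_null_space` chosen only for illustration.

```python
import numpy as np

def linear_weights(particle_x, node_x):
    """Hat-function weights on a uniform 1D grid (assumed mapping).

    Returns a matrix S with one row per node and one column per particle.
    """
    h = node_x[1] - node_x[0]
    w = 1.0 - np.abs(particle_x[None, :] - node_x[:, None]) / h
    return np.clip(w, 0.0, None)  # zero outside each node's support

def remove_null_space(S, q, tol=1e-12):
    """Project particle values q onto the row space of S.

    The discarded part lies in the null space of S, so it maps to zero
    on the nodes and the nodal values S @ q are unchanged.
    """
    _, s, Vt = np.linalg.svd(S, full_matrices=False)
    rank = int(np.sum(s > tol * s.max()))
    V_row = Vt[:rank].T  # orthonormal basis of the row space
    return V_row @ (V_row.T @ q)

# Illustrative setup: 5 nodes, 16 particles (4 per cell).
node_x = np.linspace(0.0, 1.0, 5)
particle_x = (np.arange(16) + 0.5) / 16
S = linear_weights(particle_x, node_x)

rng = np.random.default_rng(0)
q = rng.standard_normal(particle_x.size)  # arbitrary particle values

q_filtered = remove_null_space(S, q)
q_null = q - q_filtered  # component invisible to the grid

print("norm of null-space part:", np.linalg.norm(q_null))
print("max nodal value of null-space part:", np.abs(S @ q_null).max())
```

With more particles than nodes, `S` has more columns than rows, so a non-trivial null space must exist. The full SVD used here scales poorly with problem size, which is consistent with the abstract's remark that a local method is needed for real problems.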

DOE Advanced Scientific Computing Advisory Committee (ASCAC) Report: Exascale Computing Initiative Review. D. Reed, M. Berzins, R. Lucas, S. Matsuoka, R. Pennington, V. Sarkar, V. Taylor. Note: DOE Report, 2015. DOI: 10.2172/1222712

Data Science: What Is It and How Is It Taught?, H. De Sterck, C.R. Johnson. In SIAM News, SIAM, July, 2015.

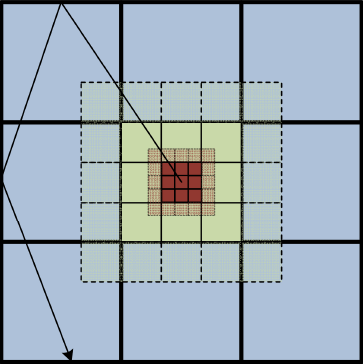

A Scalable Algorithm for Radiative Heat Transfer Using Reverse Monte Carlo Ray Tracing, A. Humphrey, T. Harman, M. Berzins, P. Smith. In High Performance Computing, Lecture Notes in Computer Science, Vol. 9137, Edited by Kunkel, Julian M. and Ludwig, Thomas, Springer International Publishing, pp. 212-230. 2015. ISBN: 978-3-319-20118-4 DOI: 10.1007/978-3-319-20119-1_16

Radiative heat transfer is an important mechanism in a class of challenging engineering and research problems. A direct all-to-all treatment of these problems is prohibitively expensive on large core counts due to pervasive all-to-all MPI communication. The massive heat transfer problem arising from the next generation of clean coal boilers being modeled by the Uintah framework has radiation as a dominant heat transfer mode. Reverse Monte Carlo ray tracing (RMCRT) can be used to solve for the radiative-flux divergence while accounting for the effects of participating media. The ray tracing approach used here replicates the geometry of the boiler on a multi-core node and then uses an all-to-all communication phase to distribute the results globally. The cost of this all-to-all is reduced by using an adaptive mesh approach in which a fine mesh is only used locally, and a coarse mesh is used elsewhere. A model for communication and computation complexity is used to predict performance of this new method. We show this model is consistent with observed results and demonstrate excellent strong scaling to 262K cores on the DOE Titan system on problem sizes that were previously computationally intractable.

Keywords: Uintah; Radiation modeling; Parallel; Scalability; Adaptive mesh refinement; Simulation science; Titan

Computational Determination of the Modified Vortex Shedding Frequency for a Rigid, Truncated, Wall-Mounted Cylinder in Cross Flow. A. Faucett, T. Harman, T. Ameel. In Volume 10: Micro- and Nano-Systems Engineering and Packaging, Montreal, ASME International Mechanical Engineering Congress and Exposition (IMECE), International Conference on Computational Science, November, 2014. DOI: 10.1115/imece2014-39064

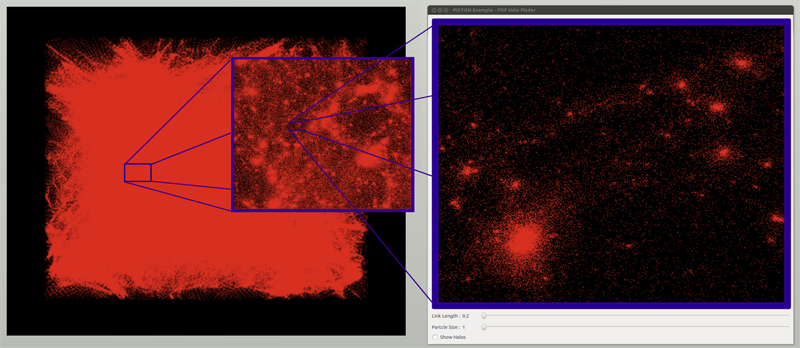

In-situ feature extraction of large scale combustion simulations using segmented merge trees. A.G. Landge, V. Pascucci, A. Gyulassy, J.C. Bennett, H. Kolla, J. Chen, P.-T. Bremer. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis (SC 2014), New Orleans, Louisiana, IEEE Press, Piscataway, NJ, USA, pp. 1020-1031. 2014. ISBN: 978-1-4799-5500-8 DOI: 10.1109/SC.2014.88

The ever increasing amount of data generated by scientific simulations coupled with system I/O constraints are fueling a need for in-situ analysis techniques. Of particular interest are approaches that produce reduced data representations while maintaining the ability to redefine, extract, and study features in a post-process to obtain scientific insights.