|

|

|

|

|

|

|

|

|

Award Number and Duration |

|

|

|

DOE DE-SC0023157 |

|

|

|

PI and Point of Contact |

|

|

|

Bei Wang Phillips (PI, University of Utah) |

|

|

|

Collaborators |

|

|

|

Tom Peterka (Lead PI) |

|

|

|

Overview |

|

|

|

This project investigates how to accurately and reliably visualize complex data consisting of multiple nonuniform domains and/or data types. Much of the problem stems from the lack of a uniform representation for disparate datasets.

To analyze such multimodal data, users face many choices in converting one modality to another, which confounds processing and visualization even for experts. Recent work on alternative data models and representations that are continuous, high-order (nonlinear), and can be queried anywhere (i.e., implicit) suggests that such models can potentially represent multiple data sources in a consistent way. |

|

|

|

Publications and Manuscripts |

|

|

|

Papers marked with * use alphabetic ordering of authors. |

| Year 3 (2024 - 2025) | |

|

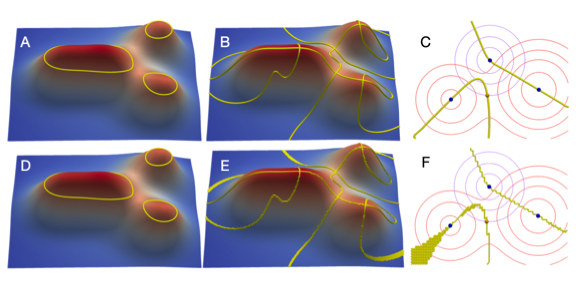

Extracting Complex Topology from Multivariate Functional Approximation: Contours, Jacobi Sets, and Ridge-Valley Graphs.

Guanqun Ma, David Lenz, Thomas Peterka, Hanqi Guo, Bei Wang. Manuscript, 2025. |

|

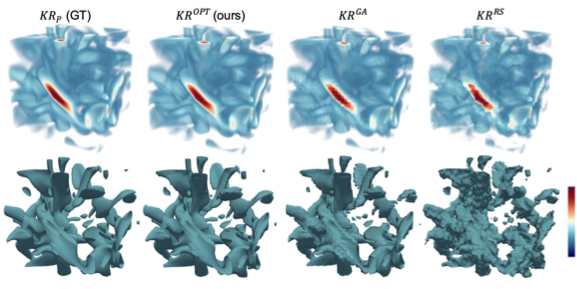

A Topology-Preserving Coreset for Kernel Regression in Scientific Visualization.

Weiran Lyu, Jeff Phillips, Bei Wang. Manuscript, 2025. |

|

Critical Point Extraction from Multivariate Functional Approximation.

Guanqun Ma, David Lenz, Tom Peterka, Hanqi Guo, Bei Wang. IEEE Workshop on Topological Data Analysis and Visualization (TopoInVis) at IEEE VIS, 2024. arXiv:2408.13193 |

|

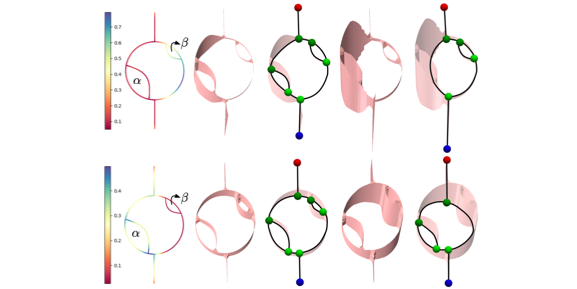

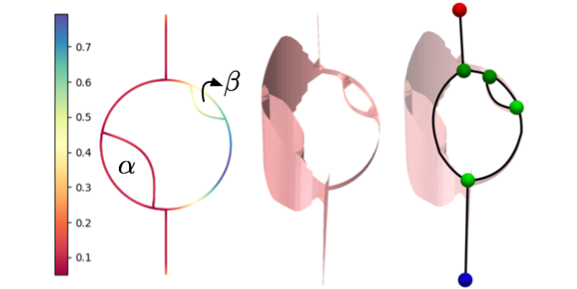

Measure-Theoretic Reeb Graphs and Reeb Spaces.

Qingsong Wang, Guanqun Ma, Raghavendra Sridharamurthy, Bei Wang. Discrete & Computational Geometry (DCG), minor revision, 2025. arXiv:2401.06748. |

| Year 2 (2023 - 2024) | |

|

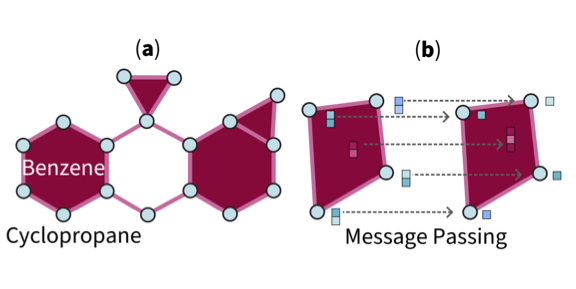

Position: Topological Deep Learning is the New Frontier for Relational Learning.

Theodore Papamarkou, Tolga Birdal, Michael Bronstein, Gunnar Carlsson, Justin Curry, Yue Gao, Mustafa Hajij, Roland Kwitt, Pietro Lio, Paolo Di Lorenzo, Vasileios Maroulas, Nina Miolane, Farzana Nasrin, Karthikeyan Natesan Ramamurthy, Bastian Rieck, Simone Scardapane, Michael T. Schaub, Petar Velickovic, Bei Wang, Yusu Wang, Guo-Wei Wei, Ghada Zamzmi. Proceedings of the 41st International Conference on Machine Learning (ICML), 2024. arXiv:2402.08871 |

|

Measure-Theoretic Reeb Graphs and Reeb Spaces.

Qingsong Wang, Guanqun Ma, Raghavendra Sridharamurthy, Bei Wang. International Symposium on Computational Geometry (SoCG), 2024. arXiv:2401.06748. |

| Year 1 (2022 - 2023) | |

|

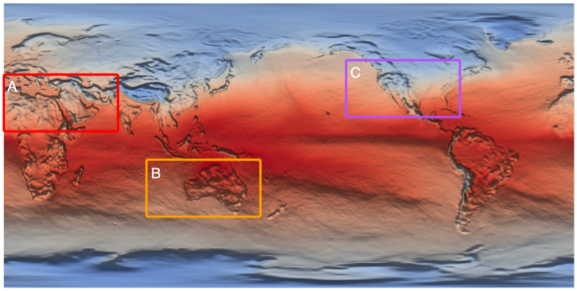

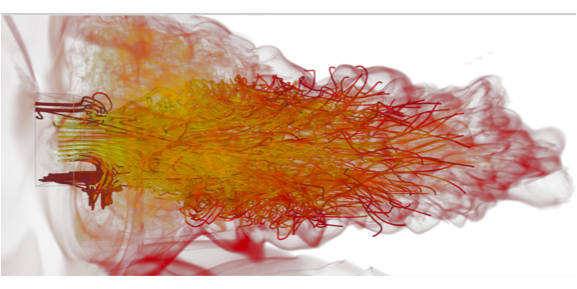

Interactive Visualization of Time-Varying Flow Fields Using Particle Tracing Neural Networks.

Mengjiao Han, Sudhanshu Sane, Jixian Li, Shubham Gupta, Bei Wang, Steve Petruzza, Chris R. Johnson. IEEE Pacific Visualization Symposium (PacificVis), 2024. Supplementary Material. |

|

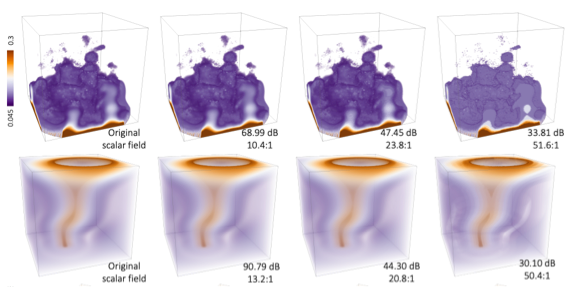

TopoSZ: Preserving Topology in Error-Bounded Lossy Compression.

Lin Yan, Xin Liang, Hanqi Guo, Bei Wang. IEEE Visualization Conference (IEEE VIS), 2023. IEEE Transactions on Visualization and Computer Graphics (TVCG), 30, pages 1302-1312, 2024. Supplementary Material. DOI:10.1109/TVCG.2023.3326920 arXiv:2304.11768. |

|

|

|

Presentations, Educational Development and Broader Impacts |

|

|

| Year 2 (2023 - 2024) |

|

| Year 1 (2022 - 2023) |

|

|

|

|

Students |

|

|

|

Guanqun Ma (Ph.D., Summer 2023 - present), Kahlert School of Computing and SCI Institute, University of Utah

Weiran (Nancy) Lyu (Ph.D., Fall 2022 - present), Kahlert School of Computing and SCI Institute, University of Utah

Former Students

Syed Fahim Ahmed (Ph.D., Spring 2023 - Summer 2024), Kahlert School of Computing and SCI Institute, University of Utah |

|

|

|

Acknowledgement |

|

|

|

This material is based upon work supported or partially supported by the United States Department of Energy (DOE) under Grant No. DE-SC0023157. |

|

|