Statistical 3D Shape Recovery

Motivation: From a human perspective, our visual system makes use of several cues to infer 3D shapes from the 2D images of objects projected onto the retina. Viewing the world from slightly different positions provides different views, which gives an important depth cue known as the disparity cue. Another cue is motion parallax, where images of nearby objects appear to move faster than those of distant objects. Even when there is only a single still image, humans can still extract 3D information from shading, which provides insight into the shape of the object. Extracting information from shading depends primarily on understanding the image formation process, defined as the response of an imaging sensor to radiation. To recover an object’s 3D shape from a single optical image, it has been shown that constraining shape-from-shading (SFS) algorithms to specific classes of objects can improve the accuracy of the recovered shape. The main motivation behind my work in this area is formulating an imaging model that describes the relationship between an object’s attributes (shape and reflectance properties) and the resulting image brightness. In particular, I have been working on shape-from-prior algorithms that incorporate statistical shape and appearance information about the object being reconstructed.

- Statistical shape-from-shading for Lambertian reflectance

- Occlusion-invariant statistical shape from shading for Lambertian reflectance

- Statistical shape from shading under natural illumination and arbitrary reflectance

- Shape from shading under near illumination and non-Lambertian reflectance

Statistical shape-from-shading for Lambertian reflectance

Joint work with: Aly Farag and Thomas Starr

The shape-from-shading (SFS) problem is a classic problem in computer vision that involves recovering the 3D shape of an object from lighting and shading cues. Solving it requires two tasks: (a) formulating an imaging model that relates 3D shape to image brightness, and (b) devising a numerical algorithm that reconstructs the 3D shape from the image.

The appearance of an object under a fixed pose depends primarily on the three components of the photometric image formation process: the object’s geometric structure (shape), its surface reflectance properties (material), and the illumination. The shading is governed by the image irradiance equation, a nonlinear first-order partial differential equation. Minimization approaches to SFS seek the solution that minimizes an energy function over the whole image. This energy function includes the brightness constraint, which is derived directly from the image irradiance equation. Regularizing constraints, such as smoothness, integrability, and intensity gradient constraints, can be added to aid the minimization.

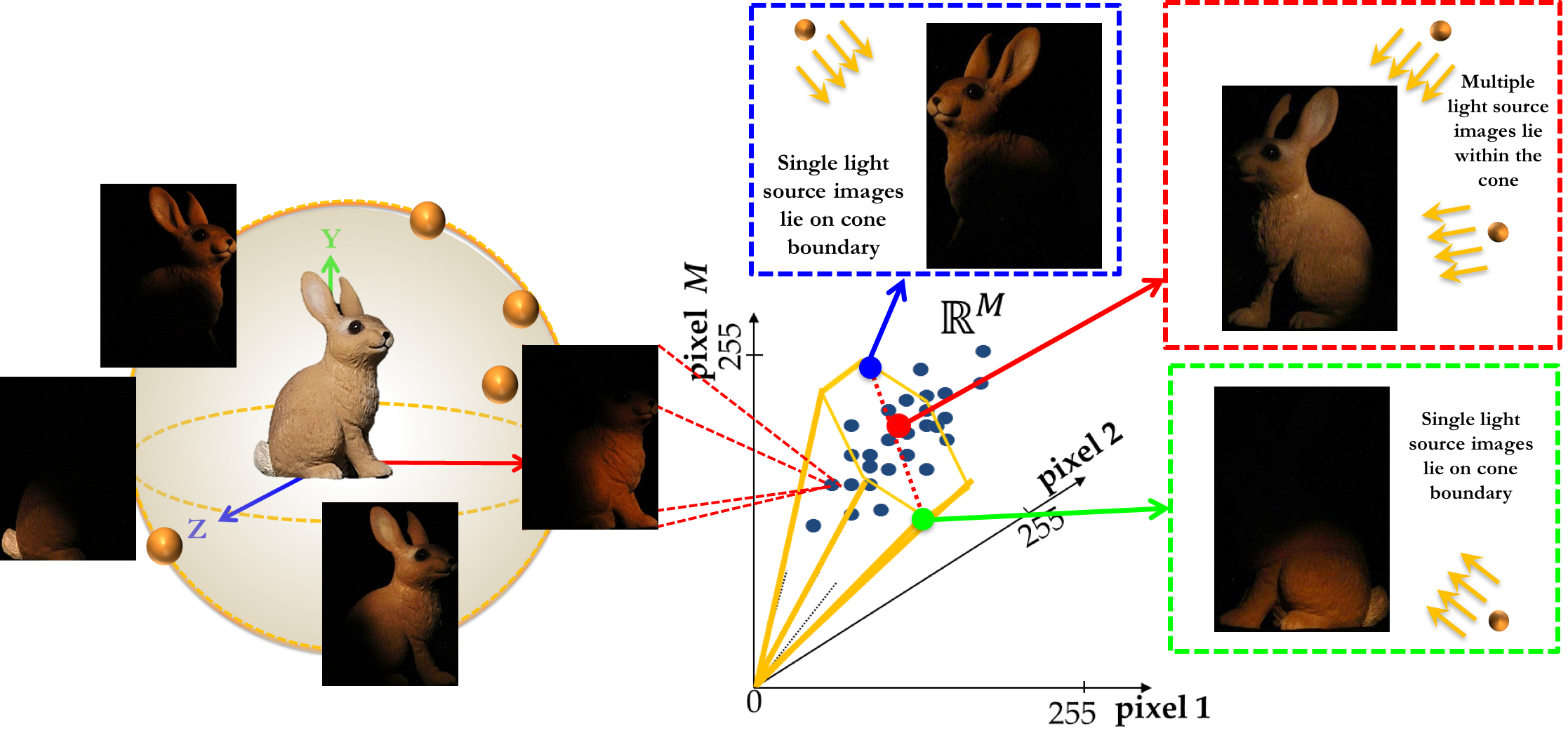
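The minimization view can be illustrated with a small sketch. It assumes a single distant light source, unit albedo, and the Lambertian reflectance map \(R(p, q)\) written in terms of the surface gradients \(p = \partial z/\partial x\) and \(q = \partial z/\partial y\); the energy is the brightness constraint plus a finite-difference smoothness penalty weighted by a hypothetical parameter `lam`. The function names are illustrative, and this is not the specific energy used in the work described here.

```python
import numpy as np

def reflectance_map(p, q, light):
    """Lambertian reflectance map R(p, q) for a distant light (unit albedo assumed)."""
    s = np.asarray(light, dtype=float)
    s = s / np.linalg.norm(s)
    # The unnormalized normal is (-p, -q, 1); divide the dot product by its length.
    shading = (-p * s[0] - q * s[1] + s[2]) / np.sqrt(1.0 + p**2 + q**2)
    return np.clip(shading, 0.0, None)  # attached shadows: no negative radiance

def sfs_energy(p, q, image, light, lam=0.1):
    """Brightness constraint plus a smoothness penalty on the gradient fields p and q."""
    brightness = np.sum((image - reflectance_map(p, q, light)) ** 2)
    # Squared finite differences of p and q along both image axes.
    smooth = sum(np.sum(np.diff(f, axis=ax) ** 2) for f in (p, q) for ax in (0, 1))
    return brightness + lam * smooth

# A flat surface facing a frontal light explains a uniform unit image exactly.
p = q = np.zeros((8, 8))
print(sfs_energy(p, q, np.ones((8, 8)), light=[0, 0, 1]))  # 0.0
```

An SFS solver would search over \(p\) and \(q\) (for example by gradient descent) to drive this energy down; integrability terms would be added in the same way as the smoothness term.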

One of the main challenges facing SFS algorithms is dealing with arbitrary illumination, since most SFS methods assume a single light source from a known direction. However, it has been shown that there is an underlying generative structure to the set of images of a fixed-pose object under variable illumination. Although the space of all such images is, in theory, infinite dimensional because lighting functions are arbitrary, it has been proved that this set of images lies in a convex cone in the image space, termed the illumination cone. Owing to its convexity, the illumination cone of a given fixed-pose object is characterized by a finite set of extreme rays, which are images of the object under appropriately chosen single distant point light sources; all other images inside the cone are nonnegative combinations of these extreme rays.

Illumination cone: Images of the same object under a fixed pose look different as the lighting conditions change. The set of \(M\)-pixel images of any convex Lambertian object seen under all possible lighting conditions, while kept at a fixed pose with respect to the imaging sensor, forms a convex cone in \(\mathbb{R}^M\) known as the illumination cone. In this imaging setup, a coated torch (flashlight) with a very small hole is used to narrow the light rays in order to simulate a single directional light source, modeled as a delta function. The light source and the camera are placed far away compared to the object’s size, and a viewer-centered coordinate system is used, to simulate distant illumination and a distant viewer for which orthographic projection can be assumed.

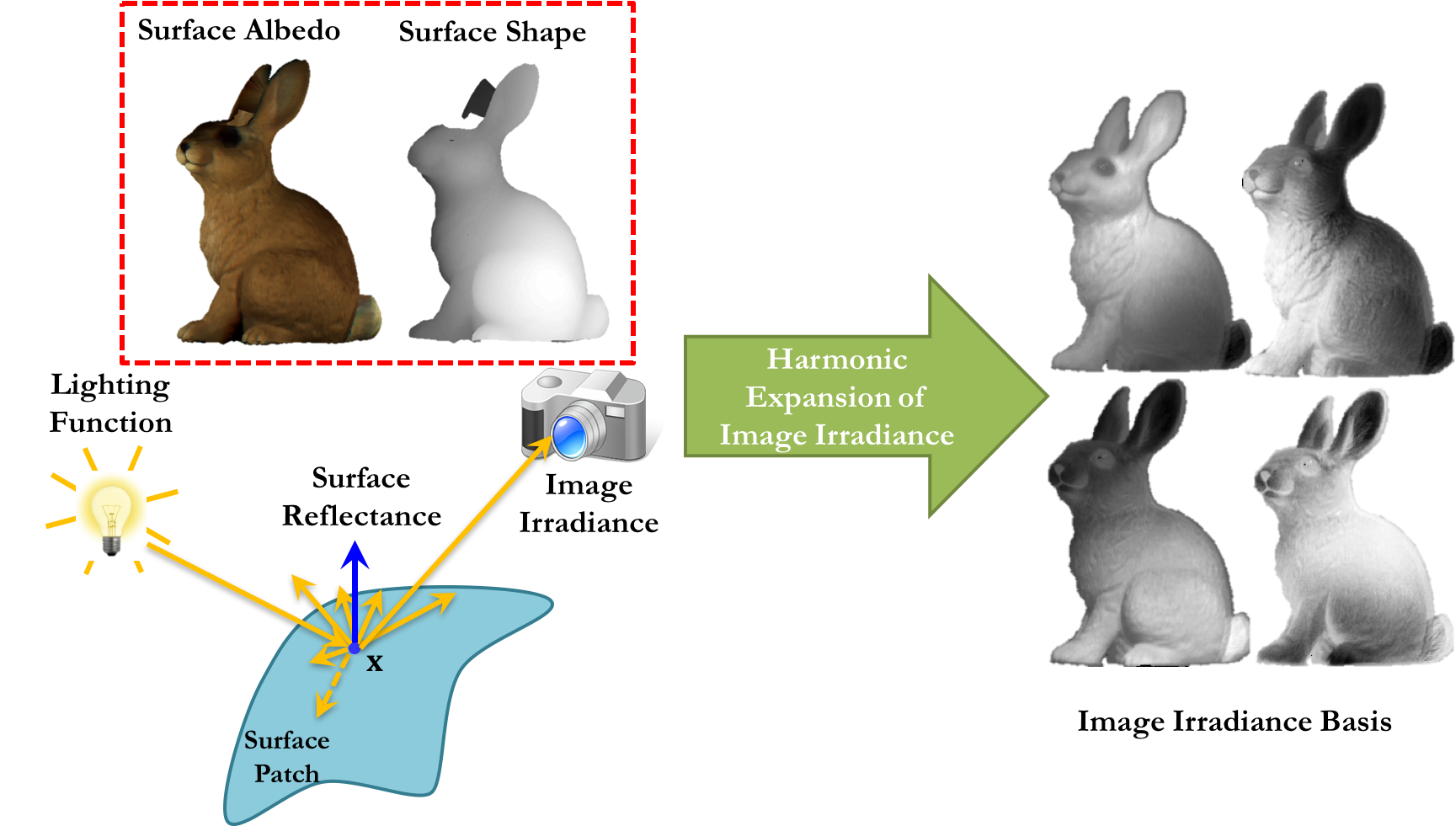
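The convexity of the cone follows from the linearity of light transport: the image under several distant point sources is the sum of the single-source images, each clamped at zero for attached shadows before summing. The sketch below checks this on a hypothetical three-pixel convex Lambertian object with made-up normals and albedos (cast shadows are ignored, which is valid only for convex shapes).

```python
import numpy as np

def lambertian_image(albedo, normals, light):
    """Image of a convex Lambertian object under one distant point source.

    albedo: (M,), normals: (M, 3) unit normals, light: (3,) direction scaled by intensity.
    """
    return albedo * np.clip(normals @ np.asarray(light, dtype=float), 0.0, None)

def multi_light_image(albedo, normals, lights, weights):
    """Nonnegative combination of single-source images: a point in the illumination cone."""
    return sum(w * lambertian_image(albedo, normals, s) for s, w in zip(lights, weights))

# Hypothetical 3-pixel object.
normals = np.array([[0.0, 0.0, 1.0], [0.6, 0.0, 0.8], [-0.6, 0.0, 0.8]])
albedo = np.array([0.9, 0.5, 0.7])
lights = [np.array([0.0, 0.0, 1.0]), np.array([1.0, 0.0, 0.0])]
combined = multi_light_image(albedo, normals, lights, weights=[0.5, 2.0])
print(combined)  # approximately [0.45 0.8 0.28]
```

Because any nonnegative weighting of single-source images yields a valid image, the set is a convex cone. The combination is generally not the image of a single source pointing along the summed direction, since the clamping is applied per source.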

Assuming a certain surface reflectance distribution function, the harmonic expansion of the image irradiance equation can be used to derive an analytic subspace that approximates images under a fixed pose but different illumination conditions. This approach requires no complicated acquisition setup; it only needs the ground-truth shape (which can be obtained with Cyberware scanners or shape-from-X approaches) and reflectance (which can be obtained from publicly available reflectance databases).

The linear subspace that approximates all possible images of a specific object under all illumination conditions, with the pose kept fixed, is constructed by assuming a certain surface reflectance distribution function and using the harmonic expansion of the lighting function.

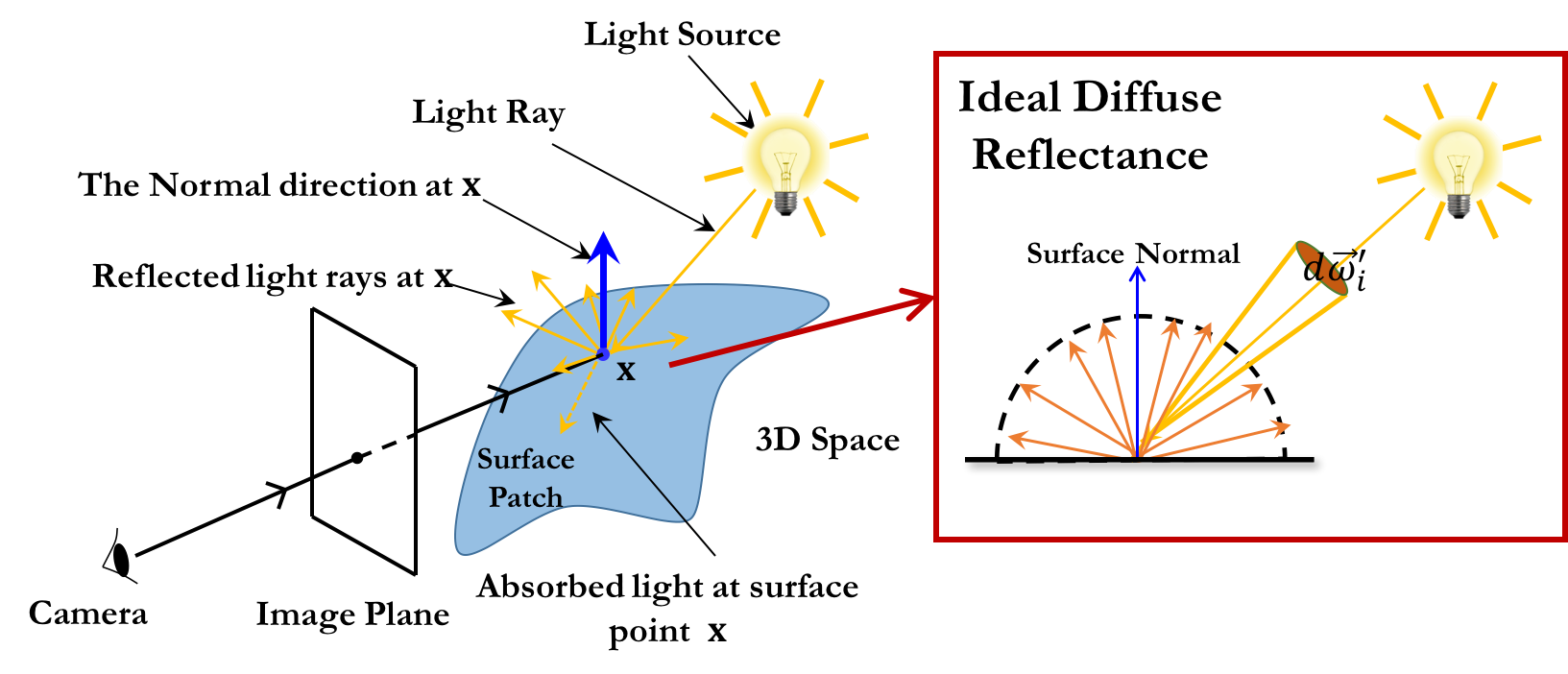
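As a sketch of the analytic subspace in the Lambertian case, a common construction (following the harmonic images of Basri and Jacobs) evaluates the first nine real spherical harmonics at each pixel’s unit normal and scales them by the albedo; the Lambertian kernel’s attenuation factors are then absorbed into the lighting coefficients. The normalization constants below follow that convention and are an assumption, since the exact constants used in this work are not given here.

```python
import numpy as np

def harmonic_basis(albedo, normals):
    """Nine harmonic basis images (as columns) from per-pixel albedo and unit normals.

    albedo: (M,), normals: (M, 3). Returns an (M, 9) matrix.
    Constants are the real spherical harmonics Y_lm (assumed convention).
    """
    nx, ny, nz = normals[:, 0], normals[:, 1], normals[:, 2]
    c0 = 1.0 / np.sqrt(4 * np.pi)
    c1 = np.sqrt(3.0 / (4 * np.pi))
    c2 = 3.0 * np.sqrt(5.0 / (12 * np.pi))
    cols = [
        c0 * np.ones_like(nx),                                          # order 0
        c1 * nz, c1 * nx, c1 * ny,                                      # order 1
        0.5 * np.sqrt(5.0 / (4 * np.pi)) * (2 * nz**2 - nx**2 - ny**2), # order 2
        c2 * nx * nz, c2 * ny * nz,
        0.5 * c2 * (nx**2 - ny**2), c2 * nx * ny,
    ]
    return albedo[:, None] * np.stack(cols, axis=1)
```

Any image of the object under distant lighting is then approximated by a linear combination of these nine columns.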

The Lambertian model is the simplest and most widely used among the imaging models. It assumes that each surface point appears equally bright from all viewing directions. Formally, with the Lambertian model, the image intensity at a surface point is determined by taking the dot product between the surface normal and the lighting direction scaled by the surface albedo (i.e. reflectance).

Left: A simplified image formation model. When a light ray hits the surface of an object, a portion of the light energy is absorbed by the surface and the rest is reflected in different directions. The reflected rays traveling toward the camera determine the brightness of the projected point in the image plane. Right: Diffuse (Lambertian) reflectance: the reflectance function is independent of the outgoing direction, so the radiance leaving the surface is the same in every direction. Thus, a Lambertian surface looks equally bright from any viewpoint.

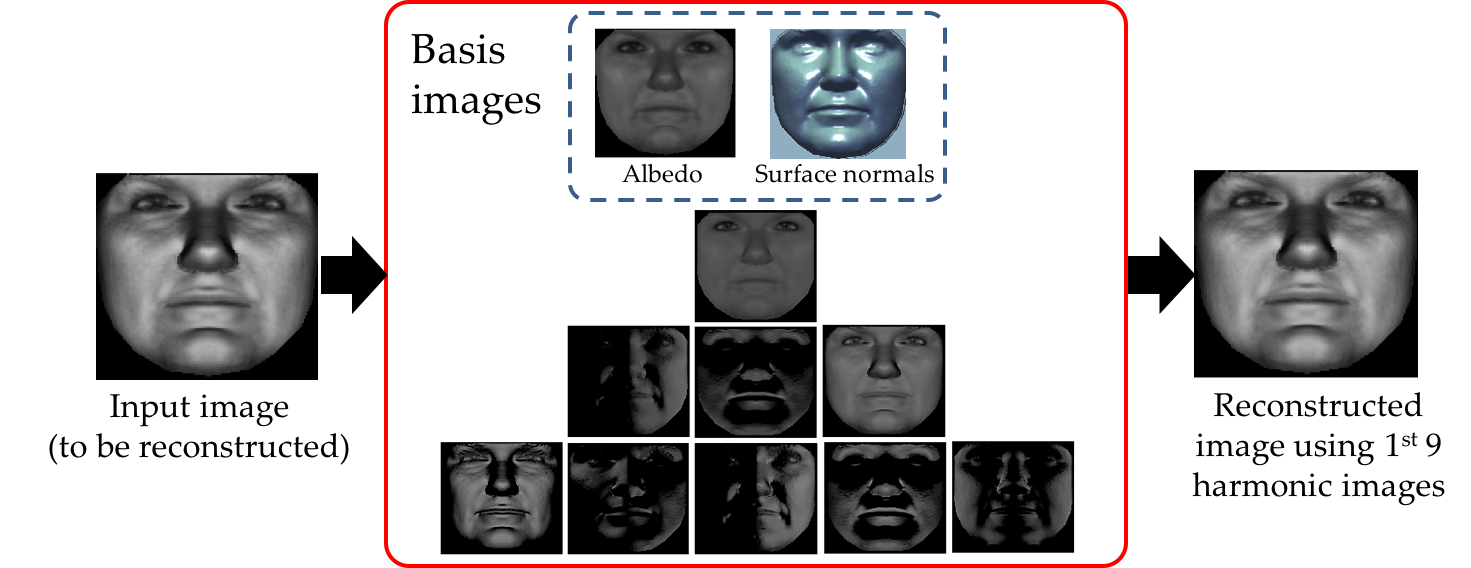
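The dot-product rule can be sketched for a surface given as a height map \(z(x, y)\) under orthographic projection: normals come from finite-difference gradients, \(\mathbf{n} \propto (-z_x, -z_y, 1)\), and intensities follow \(I = \rho \max(\mathbf{n}\cdot\mathbf{s}, 0)\). The function name and the use of `np.gradient` for the derivatives are illustrative choices.

```python
import numpy as np

def shade_height_map(z, albedo, light, spacing=1.0):
    """Render a Lambertian image of a height map z under a distant light."""
    s = np.asarray(light, dtype=float)
    s = s / np.linalg.norm(s)
    zy, zx = np.gradient(z, spacing)  # derivatives along rows (y) and columns (x)
    normals = np.stack([-zx, -zy, np.ones_like(z)], axis=-1)
    normals /= np.linalg.norm(normals, axis=-1, keepdims=True)
    # Albedo-scaled dot product of unit normal and light direction, clamped at zero.
    return albedo * np.clip(normals @ s, 0.0, None)

x = np.arange(5, dtype=float)
z = np.tile(0.5 * x, (4, 1))  # a plane tilted along x
img = shade_height_map(z, albedo=0.8, light=[0, 0, 1])
```

For the tilted plane every pixel receives the same intensity, \(0.8/\sqrt{1.25}\), because the normal is constant.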

In this regard, we proposed a coupled statistical model combining shape (2D and height maps), appearance (albedo), and spherical harmonics projection (SHP) information to parameterize facial surfaces under arbitrary illumination. The facial reconstruction framework used a 2D frontal face image under arbitrary illumination as input and output the estimated 3D shape and appearance. The shape and albedo coefficients, along with the actual shape/texture reconstructions, were further used as discriminative features for face identification.

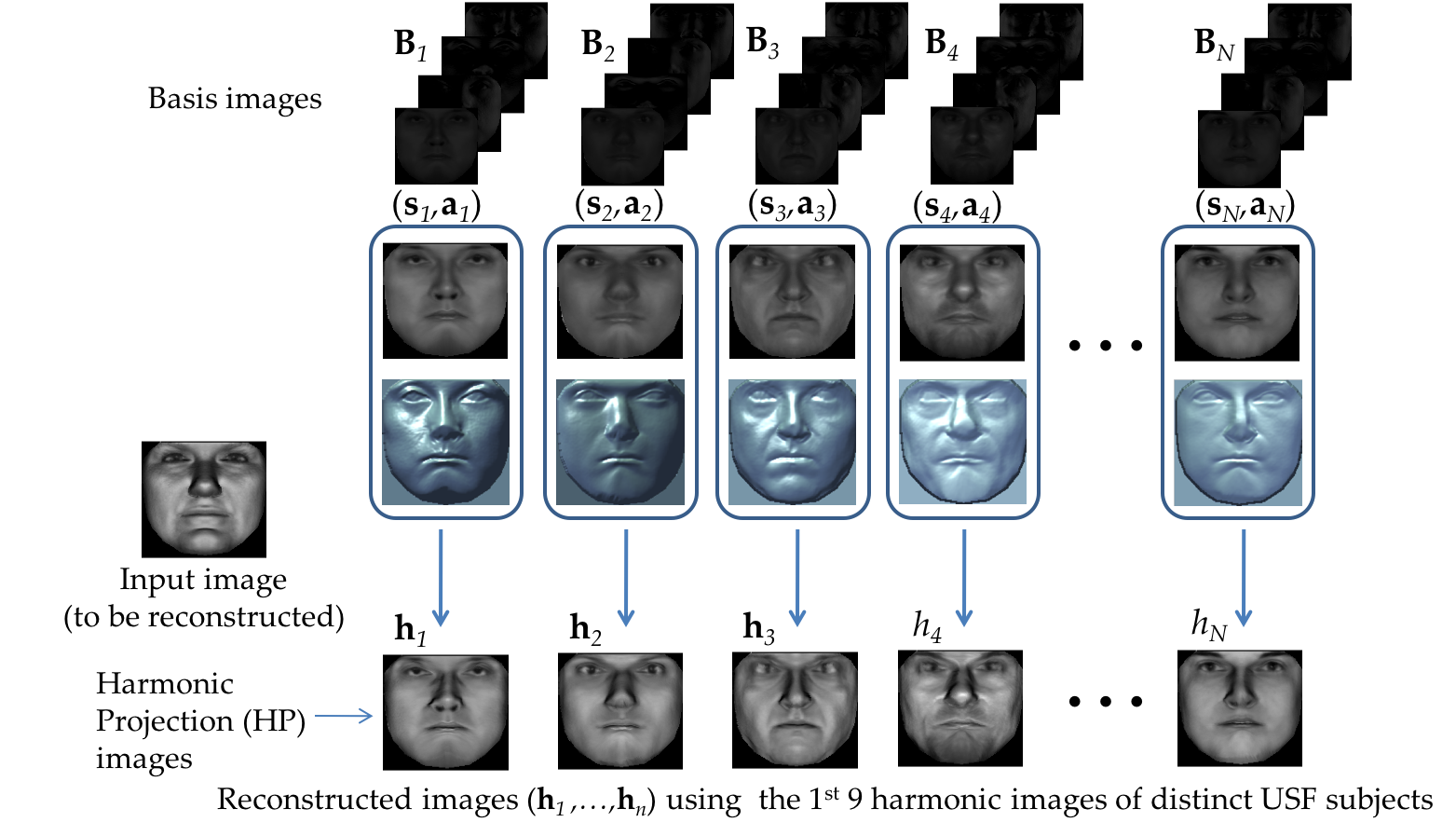

Illustration of harmonic-based image reconstruction: the input image is first projected onto the first nine spherical harmonics basis images, and the reverse process then sums the basis images scaled by the projection coefficients. The reconstructed image (called the SHP image) is visually similar to the input image.
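The projection-and-sum step amounts to a least-squares fit. With a subject’s nine basis images stacked as the columns of \(\mathbf{B}\) (\(M \times 9\)), the lighting coefficients are \(\boldsymbol{\ell} = \arg\min_{\boldsymbol{\ell}} \|\mathbf{B}\boldsymbol{\ell} - \mathbf{I}\|^2\) and the SHP image is \(\mathbf{B}\boldsymbol{\ell}\). In the sketch below, a random matrix stands in for real harmonic basis images, and the function name is hypothetical.

```python
import numpy as np

def shp_image(basis, image):
    """Project an image onto a basis and reconstruct it (least squares).

    basis: (M, K) basis images as columns; image: (M,) vectorized input image.
    Returns (projection coefficients, reconstructed SHP image).
    """
    coeffs, *_ = np.linalg.lstsq(basis, image, rcond=None)
    return coeffs, basis @ coeffs

rng = np.random.default_rng(0)
B = rng.normal(size=(100, 9))  # stand-in for nine basis images of 100 pixels
true_l = rng.normal(size=9)
image = B @ true_l             # an image lying exactly in the span of B
coeffs, recon = shp_image(B, image)
```

For a real image that is not exactly in the span, the SHP image is its closest approximation within the nine-dimensional subspace.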

Coupled statistical model illustration. The coupled statistical model links the coefficients of the intensity, shape, and spherical harmonics projection (SHP) images of faces of various subjects. Note that an Active Appearance Model (AAM) is used to automatically localize facial landmarks, which guide the alignment of the input image to the aligned albedo samples of the USF database using thin-plate spline warping.

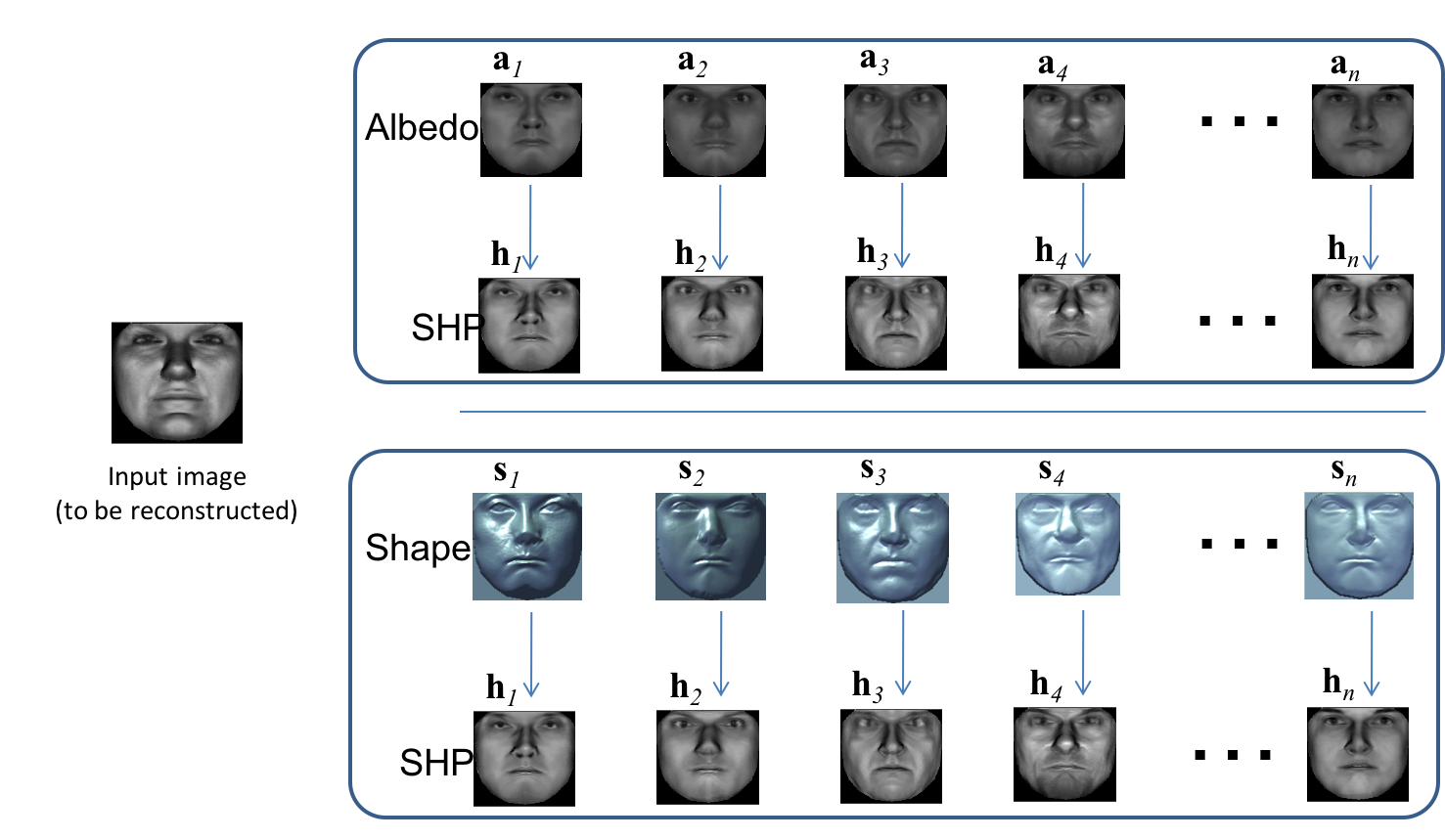
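One way to realize a coupled model of this kind is sketched below, in the spirit of the coupled model of Castelan et al. [1] but not necessarily the exact formulation used here: concatenate each training subject’s SHP vector and shape vector, run PCA on the concatenation, fit the model coefficients from the SHP part of a new input by least squares, and read the shape off the other part. The synthetic low-rank data, sizes, and names are hypothetical.

```python
import numpy as np

def fit_coupled_model(shp, shape, k):
    """PCA on concatenated [SHP | shape] training vectors (one row per subject)."""
    data = np.hstack([shp, shape])
    mean = data.mean(axis=0)
    # Rows of vt are principal directions; keep the first k as columns.
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:k].T, shp.shape[1]

def predict_shape(model, shp_input):
    """Fit coefficients using only the SHP part, then reconstruct the shape part."""
    mean, modes, n_shp = model
    a, *_ = np.linalg.lstsq(modes[:n_shp], shp_input - mean[:n_shp], rcond=None)
    return mean[n_shp:] + modes[n_shp:] @ a

rng = np.random.default_rng(1)
W_shp, W_shape = rng.normal(size=(3, 50)), rng.normal(size=(3, 40))
latent = rng.normal(size=(20, 3))  # 20 hypothetical subjects, 3 hidden factors
model = fit_coupled_model(latent @ W_shp, latent @ W_shape, k=3)
z = rng.normal(size=3)             # a new subject
estimated_shape = predict_shape(model, z @ W_shp)
```

Because the synthetic data are exactly rank 3, the recovered shape matches `z @ W_shape`; with real data the estimate is only as good as the coupling captured by the retained modes.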

We further extended this work to include 2D shape information in the model. We also decoupled this coupled model to obtain a separate model for shape and albedo where the classic brightness constraint in SFS is approximated using spherical harmonics projection.

The coupled model can be decomposed into two parts, which can then be cast as a regression framework. There are two regression models at work here, namely: (a) SHP-to-shape and (b) SHP-to-texture.

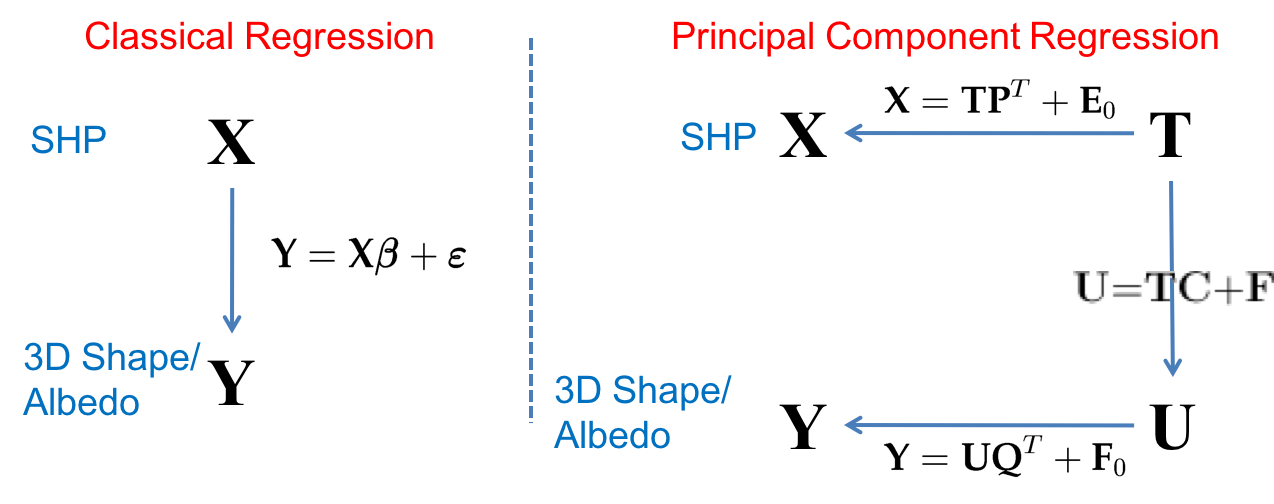

To speed up the process, we cast our models in a regression framework. However, multivariate multiple linear regression (MLR) cannot be applied directly to fit a model between the original data matrices because of their high dimensionality. Instead, principal component regression (PCR) was used to learn the relationship between spherical harmonics projection (SHP) images and shape, and between SHP images and texture, independently. PCR-based estimation reconstructs the shape with direct matrix operations and provides a computationally efficient alternative to iterative reconstruction methods.

Left: Multivariate multiple linear regression: a model (\(\mathbf{Y} = \mathbf{X}\beta + \epsilon\)) is fitted directly between the independent data \(\mathbf{X}\) and the dependent data \(\mathbf{Y}\). Right: Principal component regression: instead of fitting directly between \(\mathbf{X}\) and \(\mathbf{Y}\), both are first transformed to low-dimensional subspaces, forming \(\mathbf{T}\) and \(\mathbf{U}\), i.e., \(\mathbf{X} = \mathbf{TP}^T + \mathbf{E}_0\) and \(\mathbf{Y} = \mathbf{UQ}^T + \mathbf{F}_0\), where the columns of \(\mathbf{P}\) and \(\mathbf{Q}\) are eigenvectors. The actual multiple linear regression (\(\mathbf{U} = \mathbf{TC} + \mathbf{F}\)) is performed between \(\mathbf{T}\) and \(\mathbf{U}\).
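A minimal principal component regression sketch following the notation above: PCA reduces the mean-centered \(\mathbf{X}\) and \(\mathbf{Y}\) to score matrices \(\mathbf{T}\) and \(\mathbf{U}\), ordinary least squares fits \(\mathbf{U} \approx \mathbf{T}\mathbf{C}\), and predictions are mapped back through \(\mathbf{Q}\). Here \(\mathbf{X}\) would hold vectorized SHP images and \(\mathbf{Y}\) shapes (or textures), one row per training subject; the component counts, synthetic data, and function names are assumptions for illustration.

```python
import numpy as np

def pca(data, k):
    """Mean, top-k loadings (d x k), and scores (n x k) of a data matrix (rows = samples)."""
    mean = data.mean(axis=0)
    centered = data - mean
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    loadings = vt[:k].T
    return mean, loadings, centered @ loadings

def fit_pcr(X, Y, kx, ky):
    """Fit U = T C between the PCA scores T of X and U of Y (both mean-centered)."""
    mx, P, T = pca(X, kx)
    my, Q, U = pca(Y, ky)
    C, *_ = np.linalg.lstsq(T, U, rcond=None)
    return mx, P, my, Q, C

def predict_pcr(model, X_new):
    """Project new rows of X to scores, regress to Y scores, and map back to Y space."""
    mx, P, my, Q, C = model
    return my + (X_new - mx) @ P @ C @ Q.T

# Synthetic, exactly low-rank stand-in data: 25 subjects sharing 3 hidden factors.
rng = np.random.default_rng(2)
Wx, Wy = rng.normal(size=(3, 60)), rng.normal(size=(3, 30))
latent = rng.normal(size=(25, 3))
model = fit_pcr(latent @ Wx, latent @ Wy, kx=3, ky=3)
z = rng.normal(size=(2, 3))
Y_pred = predict_pcr(model, z @ Wx)
```

Prediction is a fixed chain of matrix products, which is why this route avoids iterative fitting at test time. With real data, `kx` and `ky` would be chosen to retain most of the variance.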

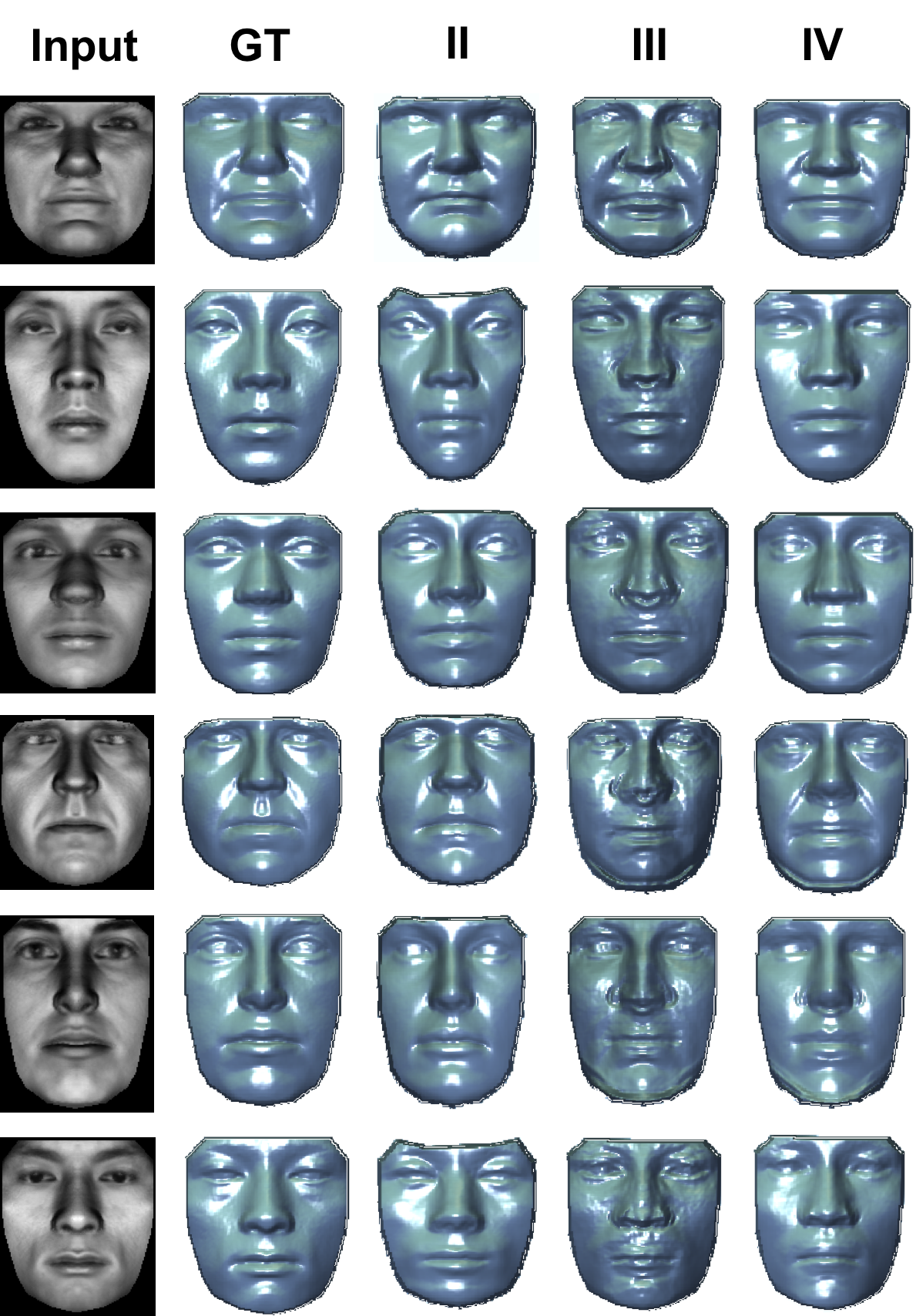

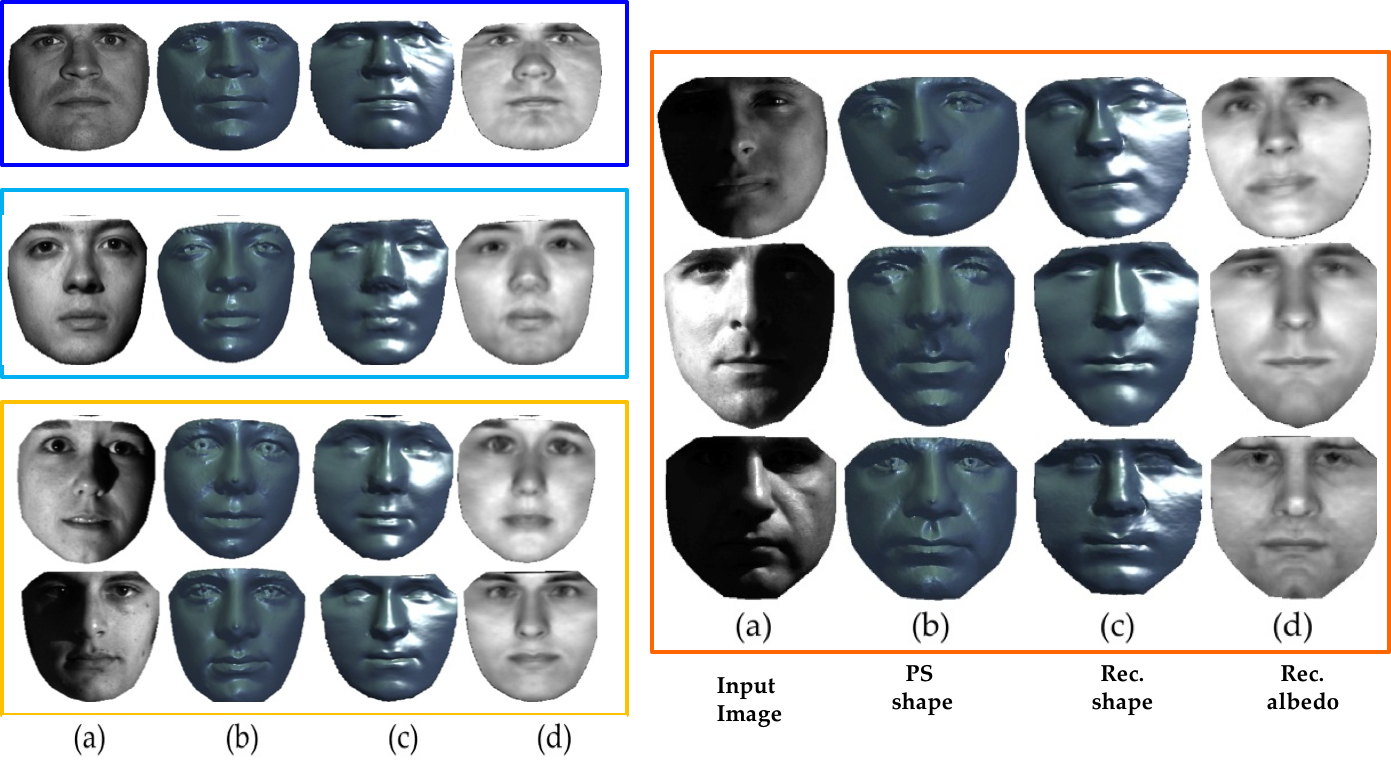

Recovered shapes, together with the input image and ground-truth (GT) shape, for the three model-based methods. Methods III and IV refer to Castelan et al. [1, 2] and Ahmed et al. [3], respectively. The results of Methods II and IV are visually close. Castelan’s version (Method III) struggles with input images under varying illumination.

Recovered albedo, together with the input image and ground-truth (GT) albedo, for the three model-based methods. Methods III and IV refer to Castelan et al. [1, 2] and Ahmed et al. [3], respectively. The results of Methods II and IV are visually close. Castelan’s version (Method III) struggles with input images under varying illumination.

[1] M. Castelan, W. A. P. Smith, and E. R. Hancock. A coupled statistical model for face shape recovery from brightness images. IEEE Transactions on Image Processing, 16(4):1139–1151, 2007.

[2] M. Castelan and J. V. Horebeek. 3D face shape approximation from intensities using partial least squares. Computer Vision and Pattern Recognition Workshop, 0:1–8, 2008.

[3] A. Ahmed and A. Farag. A new statistical model combining shape and spherical harmonics illumination for face reconstruction. In Proceedings of the 3rd International Conference on Advances in Visual Computing - Volume Part I, ISVC’07, pages 531–541, Berlin, Heidelberg, 2007. Springer-Verlag.

Related publications:

Ham Rara, Shireen Y. Elhabian, Thomas Starr, Aly Farag. 3D Face Recovery from Intensities of General and Unknown Lighting Using Partial Least Squares. Proc. of 2010 IEEE International Conference on Image Processing (ICIP), pp. 4041-4044, 2010

Ham Rara, Shireen Y. Elhabian, Thomas Starr, Aly Farag. Model-Based Shape Recovery from Single Images of General and Unknown Lighting. IEEE International Conference on Image Processing (ICIP), Nov. 7 - Nov. 10, 2009, pp. 517-520.

Ham Rara, Shireen Y. Elhabian, Thomas Starr, Aly Farag. Face Reconstruction and Recognition Using a Statistical Model Combining Shape and Spherical Harmonics. IEEE SOUTHEASTCON, March 2009, pp. 350-355.

Ham Rara, Shireen Y. Elhabian, Thomas Starr, Aly Farag. A Statistical Model Combining Shape and Spherical Harmonics for Face Reconstruction and Recognition. Biomedical Engineering Conference, CIBEC2008. Cairo International, 1:1-4, 18-20 Dec. 2008.

Occlusion-invariant statistical shape from shading for Lambertian reflectance

Joint work with: Aly Farag

Another evident challenge for SFS approaches is occlusion. The image formation process itself (e.g., perspective projection and shadows caused by the illumination conditions) generates occlusions that appear as intensity discontinuities in the observed image. Another source of occlusion is apparel and accessories such as sunglasses, hats, and eyeglasses. Even in the absence of an occluding object, violations of the assumptions of an SFS statistical model can also be treated as occlusion. Hence, robustness against occlusion is crucial for shape recovery from a single image.

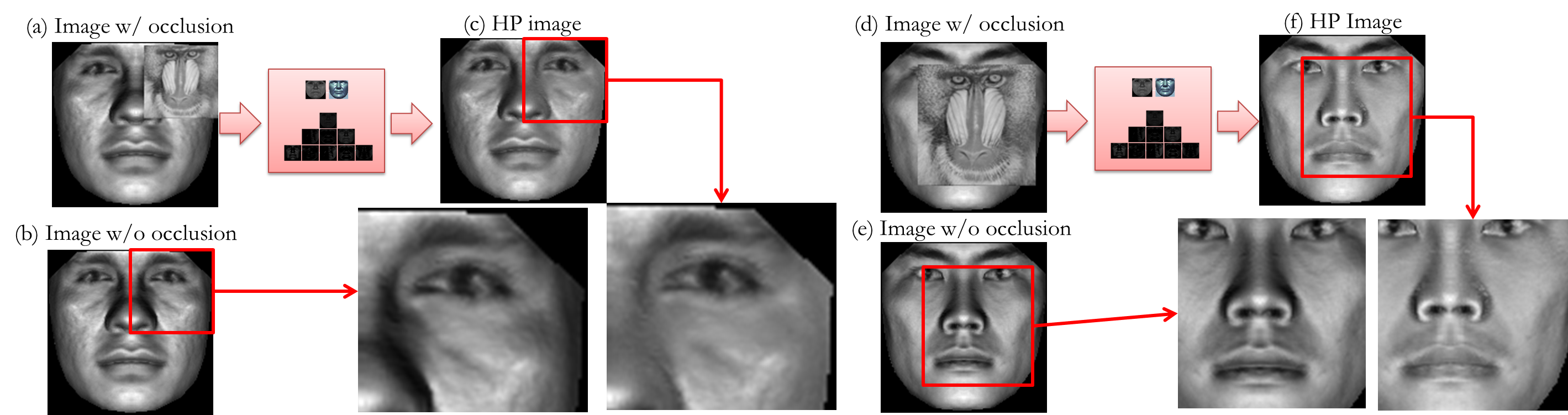

Two sample input images, (b) and (e), illuminated by a combined light source at (0,0,1) and (0,0.5,0.9), are corrupted by 25% (a) and 50% (d) contiguous occlusion. Spherical harmonics projection (SHP) was used to reconstruct each input image from the basis images of the same subject, (c) and (f). The input image is not correctly recovered because the occluded region was included when constructing the linear system of equations for the harmonic projection.

In this regard, we extended our statistical SFS framework to handle contiguous occlusion, where a sparse portion of the image is corrupted. We did not assume any prior knowledge of the color, shape, size, or number of occluding pixels. We were mainly concerned with computing the spherical harmonics projection images for partially occluded facial images. Occlusions were viewed either as (1) statistical outliers to an assumed model, or (2) locally contiguous regions of erroneous pixels. Casting occlusions as statistical outliers allowed us to use robust statistical techniques to estimate the parameters that best fit a given model to a set of data measurements when the data deviate statistically from the model assumptions. Alternatively, assuming that corrupted pixels are likely to be adjacent to each other in the observed image, we can model their spatial interaction using a Markov random field with a homogeneous isotropic Potts model and an asymmetric Gibbs potential function. The asymmetric Potts model was adopted to guarantee that the Gibbs energy function is submodular, so it can be minimized in polynomial time using a standard graph-cuts approach.
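To illustrate the outlier view, here is a sketch of robust projection by iteratively reweighted least squares (IRLS) with Geman-McClure weights \(w(r) \propto \sigma^2/(r^2 + \sigma^2)^2\), the robust function used by the RSG variant. The scale `sigma`, the iteration count, the ordinary least-squares initialization, the 0.5 weight threshold, and the synthetic data are assumptions, not settings from this work; the MRF/graph-cuts variant is not shown.

```python
import numpy as np

def robust_projection(basis, image, sigma=1.0, n_iter=50):
    """Robustly fit image ~ basis @ coeffs by IRLS with Geman-McClure weights.

    basis: (M, K) basis images as columns; image: (M,) vectorized image.
    Returns the coefficients and the per-pixel weights from the last iteration;
    weights near zero flag likely occluded pixels.
    """
    coeffs, *_ = np.linalg.lstsq(basis, image, rcond=None)  # ordinary LS start
    w = np.ones_like(image)
    for _ in range(n_iter):
        r = image - basis @ coeffs
        w = (sigma**2 / (r**2 + sigma**2)) ** 2  # proportional to the Geman-McClure IRLS weight
        sw = np.sqrt(w)
        coeffs, *_ = np.linalg.lstsq(basis * sw[:, None], image * sw, rcond=None)
    return coeffs, w

# Synthetic example: an image in the span of a random stand-in basis,
# with a contiguous block of 40 pixels corrupted by a large offset.
rng = np.random.default_rng(3)
B = rng.normal(size=(200, 9))
true_l = rng.normal(size=9)
observed = B @ true_l
observed[:40] += 10.0
robust_l, weights = robust_projection(B, observed, sigma=1.0)
ls_l, *_ = np.linalg.lstsq(B, observed, rcond=None)
occluded = weights < 0.5  # hypothetical threshold for the occlusion support
```

Ordinary least squares spreads the corruption over all coefficients, while the reweighting suppresses the occluded block. The thresholded weights give a rough occlusion support, although, unlike the MRF formulation, nothing here encourages that support to be spatially contiguous.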

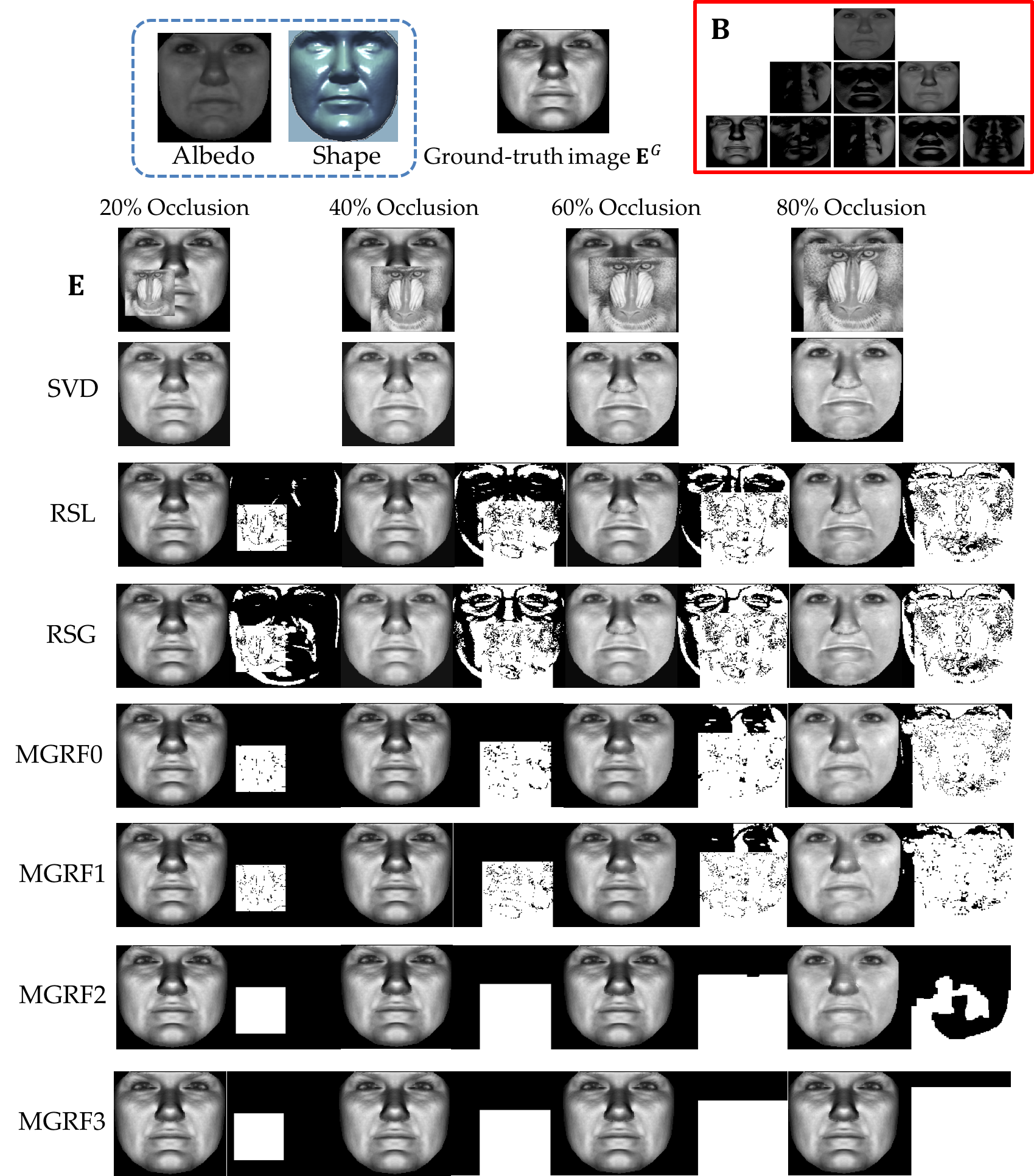

Sample results of SHP image reconstruction with different levels of occlusion. The occlusion spatial support estimated by each algorithm is shown in the even-numbered columns. MGRF3 provides the best results in terms of both the reconstructed SHP image and the estimated occlusion spatial support. Note that the SVD algorithm does not estimate a spatial support. Codes: SVD: baseline projection method; RSL: robust projection with the Lorentzian function; RSG: robust projection with the Geman-McClure function; MGRF0: Markov-Gibbs random field with a predefined Gibbs potential; MGRF1: same as MGRF0 but with automatic estimation of the Gibbs potential parameter; MGRF2: same as MGRF1 with a mixture-of-Gaussians likelihood function; MGRF3: same as MGRF2 with an adaptive error threshold.

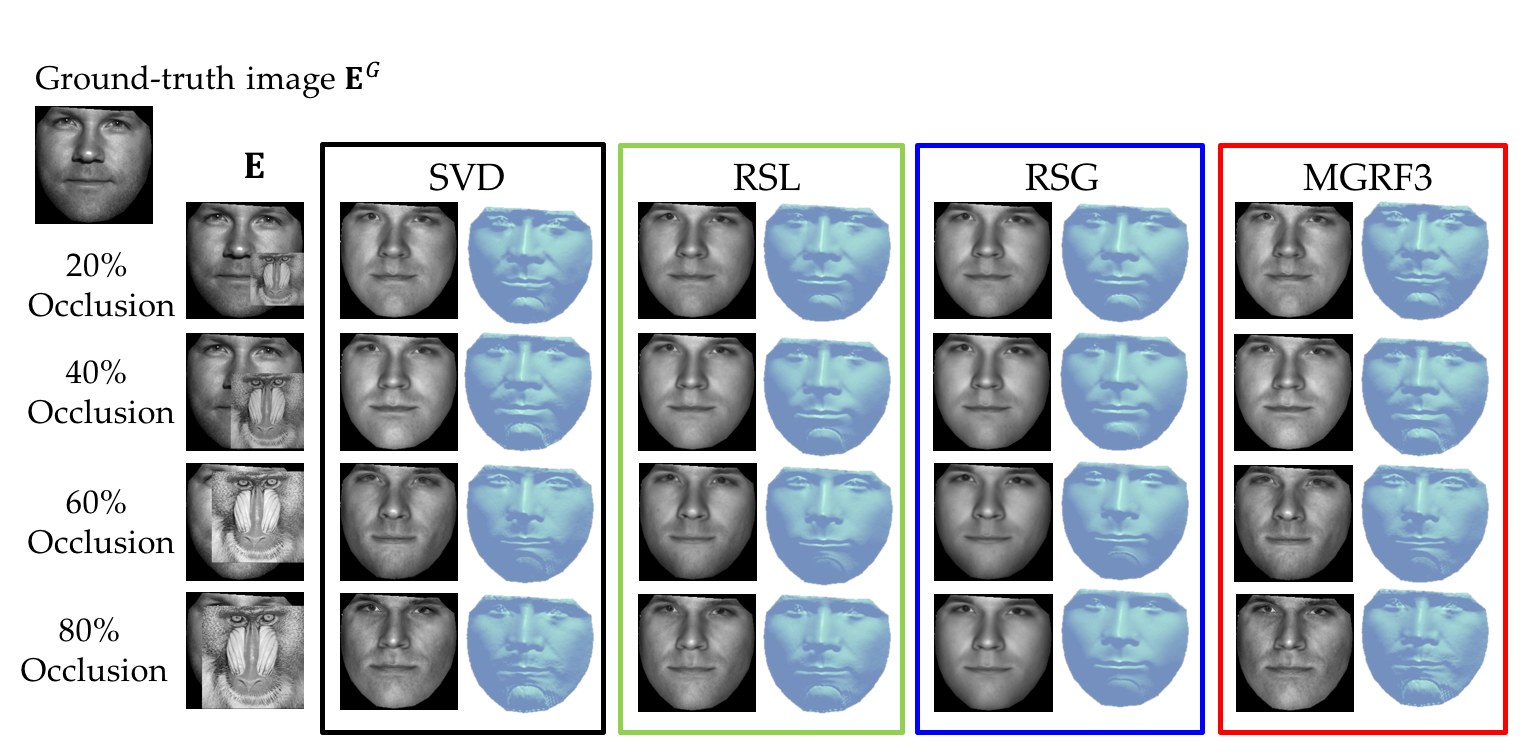

Shape and albedo recovery results for an out-of-training sample from the Yale Database, where a baboon image is used as the occluder.

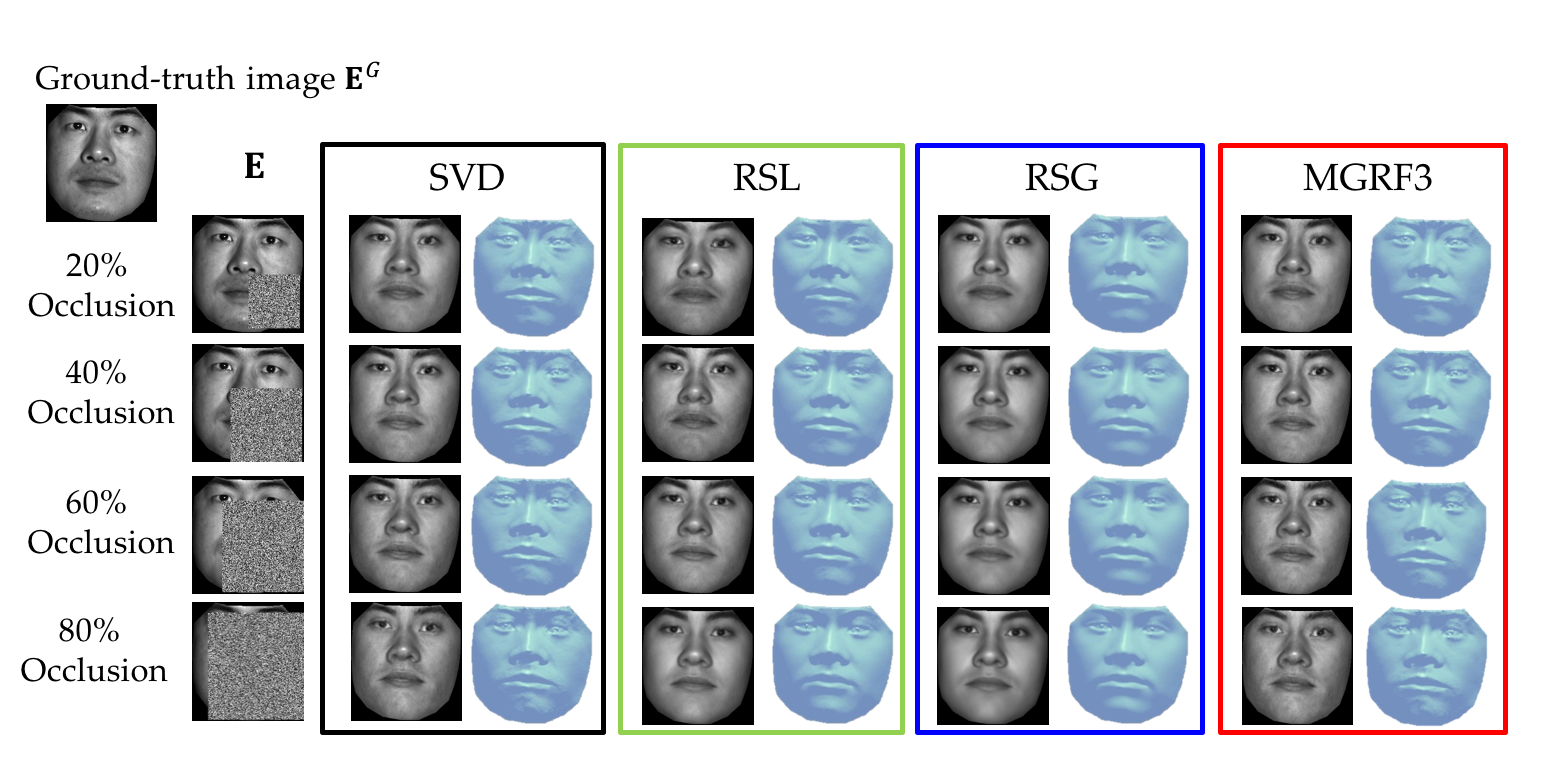

Shape and albedo recovery results for an out-of-training sample from the Yale Database, where a random occluder is used.

On the medical side, we adapted our statistical SFS to restore the missing occlusal surface of a broken tooth crown (inlay/onlay) or even the crown of a whole missing tooth. In particular, we presented an affordable, flexible, automatic, and accurate dental system for tooth restoration in which user intervention was kept to a minimum. The inherent relation between the photometric information and the underlying 3D shape was formulated as a coupled statistical model in which the effect of illumination was modeled using spherical harmonics. Moreover, shape and texture alignment were accomplished using a proposed definition of anatomical jaw landmarks, which were detected automatically.

Block diagram of the proposed model-based human teeth restoration: (a) An aligned ensemble of the shapes and textures (oral cavity images) of human jaws is used to build the 3D shape model. (b) Given the texture and surface normals (defining the shape) of a certain jaw in the ensemble, harmonic basis images are constructed. Given an input oral cavity image under general unknown illumination and a set of human jaw anatomical landmark points: (c) Dense correspondence is established between the input image and each jaw sample in the ensemble. (d) The input image is then projected onto the subspace spanned by the harmonic basis of each sample in the ensemble, which encodes the illumination conditions of the input image. These images are then used to construct an SHP model of the input image. (e) The inherent relation between the SHP images and the corresponding shape and texture is coupled into a statistical model, where (f) the shape is recovered using gradient descent initialized by the sample mean.

The system was able to handle different missing and broken parts (crowns, inlays, and onlays) from a single image, with accuracy comparable to existing methods and without manual intervention. The restored surfaces blended smoothly with the adjacent teeth, and the morphological character of the tooth was retained.

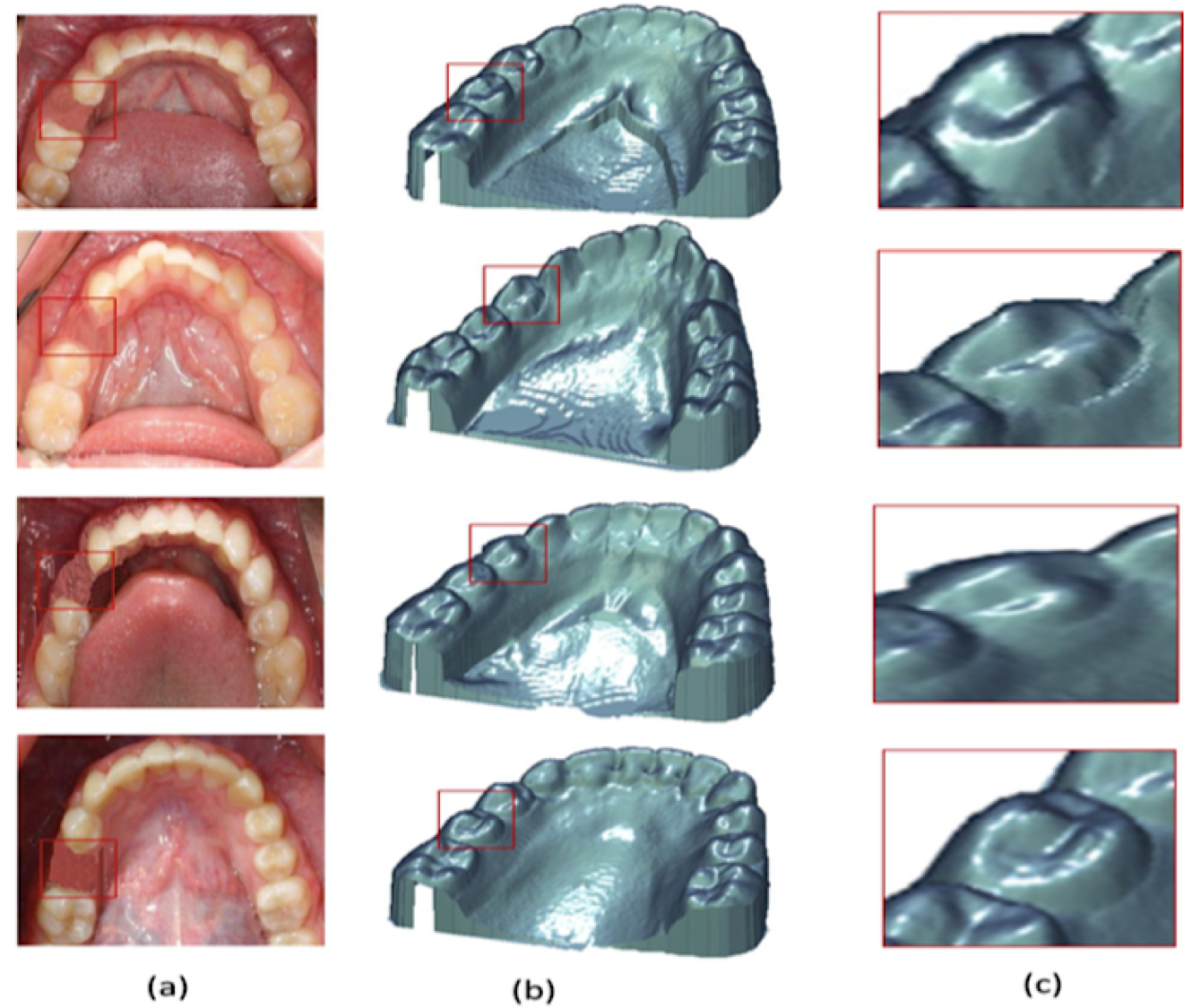

Sample crown restoration results: (a) the input image, (b) the reconstructed jaw with the restored part, and (c) a zoomed-in view of the restored part.

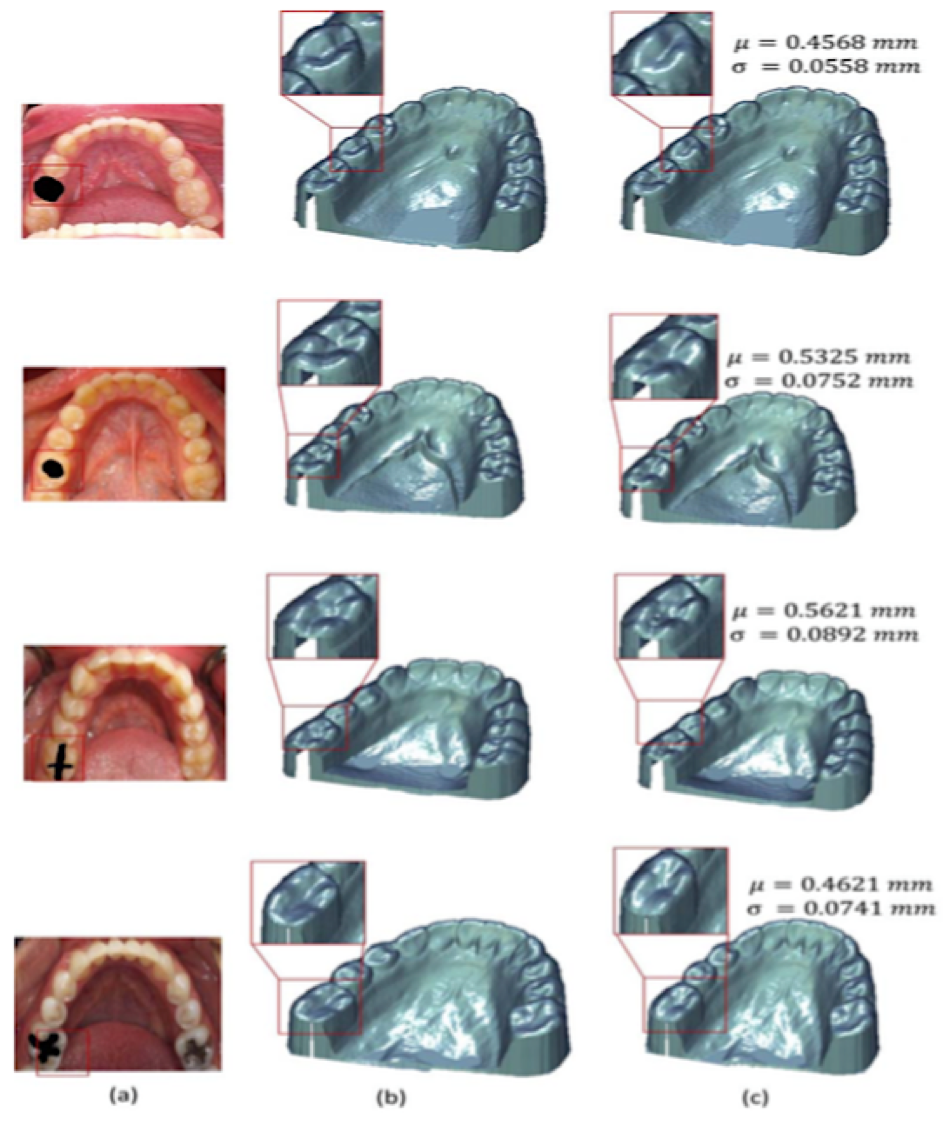

Sample filling restoration results: (a) images of jaws that were manually distorted to mimic missing parts, (b) ground truth of the human jaw from CT, and (c) the human jaw with the restored part, marked with a red box, with the mean and standard deviation of the RMS error.

Related publications:

Shireen Y. Elhabian, Ham Rara, Asem Ali, Aly Farag. Illumination-invariant Statistical Shape Recovery with Contiguous Occlusion. Eighth Canadian Conference on Computer and Robot Vision (CRV), pp. 301-308, 2011.

Aly Farag, Shireen Y. Elhabian, Aly Abdelrahim, Wael Aboelmaaty, Allan Farman and David Tazman. Model-based Human Teeth Shape Recovery from a Single Optical Image with Unknown Illumination. In Medical Computer Vision. Recognition Techniques and Applications in Medical Imaging, pp. 263-272. Springer Berlin Heidelberg, 2013.

Eslam Mostafa, Shireen Y. Elhabian, Aly Abdelrahim, Salwa Elshazly and Aly Farag. Statistical morphable model for human teeth restoration. In IEEE International Conference on Image Processing (ICIP), pp. 4285-4288, 2014.

Statistical shape from shading under natural illumination and arbitrary reflectance

Joint work with: Aly Farag, David Tasman, and Allan Farman

An ideal solution to the image irradiance (appearance) modeling problem should incorporate complex illumination, cast shadows, and realistic surface reflectance properties, moving away from the simplifying assumptions of Lambertian reflectance and single-source distant illumination. By handling arbitrary complex illumination and non-Lambertian reflectance, the appearance models and associated statistical shape recovery algorithms that we proposed moved the state of the art closer to this ideal.

For vision-related applications, we proposed a framework to recover 3D facial shape from a single image under unknown general illumination, while relaxing the unrealistic assumption of Lambertian reflectance. Prior shape, albedo, and reflectance models learned from real data, which are metric in nature, were incorporated into the shape recovery framework. Adopting a frequency-space representation of the image irradiance equation, we proposed an appearance model, termed Harmonic Projection Images, which explicitly accounts for different human skin types as well as complex illumination conditions. Assuming that skin reflectance obeys the Torrance-Sparrow model, we proved analytically that it can be represented by a harmonic basis of at most 5th order, for which a closed form is provided. The proposed recovery framework is a non-iterative approach that incorporates a regression-like algorithm in the minimization process. Experiments on synthetic and real images illustrated the robustness of the proposed appearance model to illumination variation.

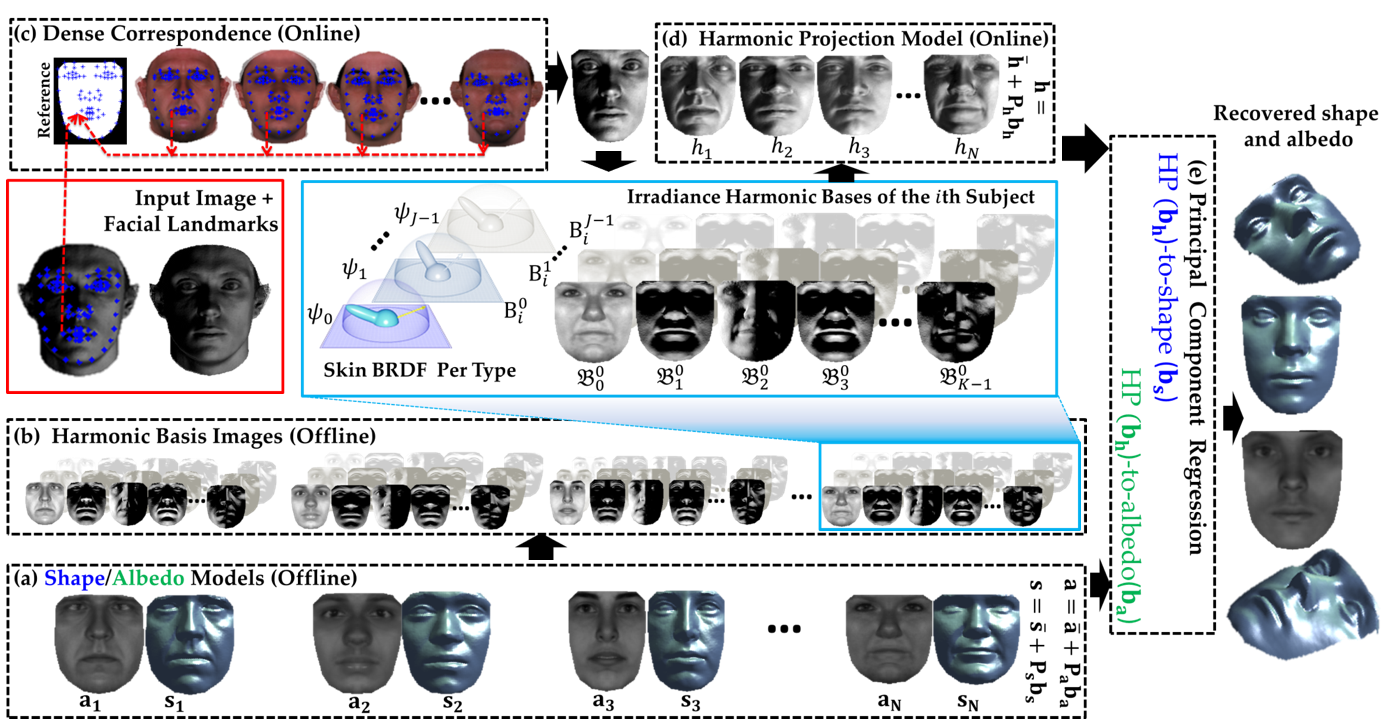

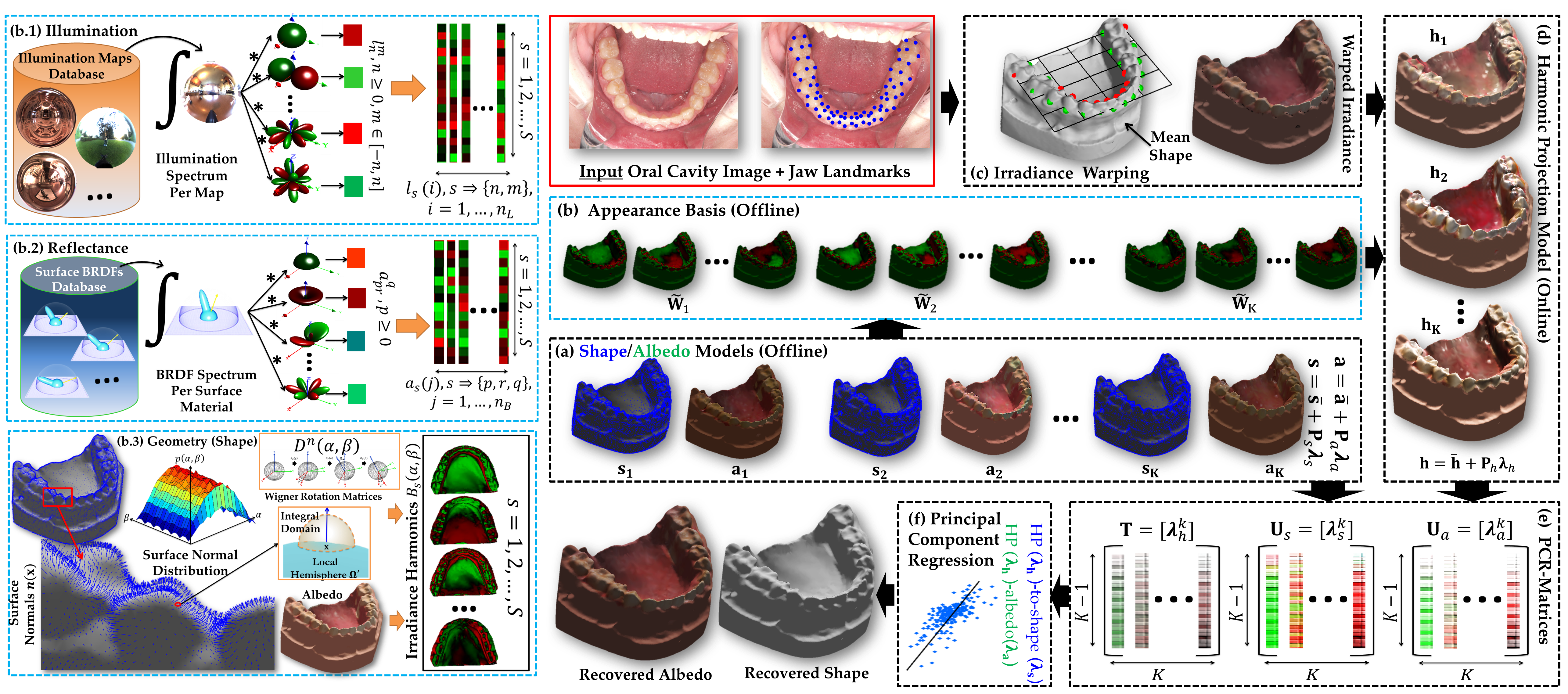

Block diagram of the proposed model-based facial shape recovery: (a) An aligned ensemble of facial shapes and albedos is used to build shape/albedo models. (b) Given the albedo and surface normals of a certain subject in the ensemble, harmonic basis images are constructed to encode the reflectance properties of different skin types. Given an input image under general unknown illumination and a set of facial landmark points, including both anatomical and pseudo-landmarks: (c) Dense correspondence is established between the input image and each subject in the ensemble, where each pixel position within the convex hull of a reference shape corresponds to a certain point on a sample facial scan (shape and albedo) and, at the same time, to a certain point on the input image. (d) The input image, in the reference frame, is projected onto the subspace spanned by the harmonic basis of each subject in the ensemble; the basis images are scaled by the projection coefficients and summed to construct the harmonic projection (HP) images. An HP image maintains the subject identity of the corresponding harmonic basis while encoding the illumination conditions and reflectance properties of the input image. The HP images are then used to construct a harmonic projection model of the input image. (e) The inherent relation between the HP images and the corresponding shape/albedo is cast as a regression framework with two regression models: HP-to-shape and HP-to-albedo. Principal component regression is used to solve for the shape and albedo coefficients to recover the shape and albedo of the input image.

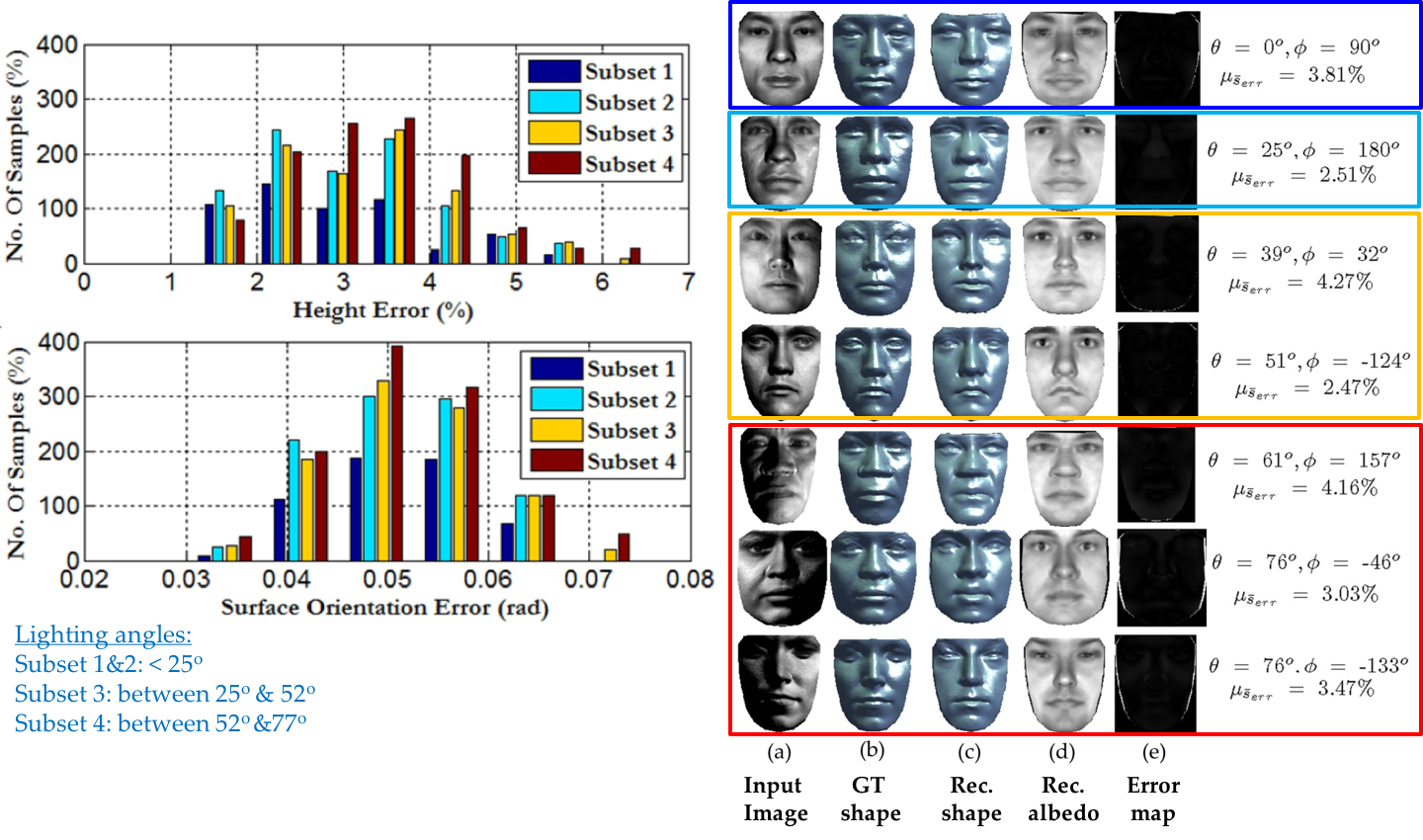

Left: Error histograms of the recovered shape for 80 out-of-training USF samples under the illumination conditions of the extended YaleB database. Right: Recovered shapes for different out-of-training USF samples: (a) input image under a directional light source with inclination angle \(\theta\) and azimuthal angle \(\phi\), (b) ground-truth shape, (c) recovered shapes, (d) recovered albedos, and (e) error maps with the average height error shown in percentages. For comparison, our earlier work [*] reported, for the Lambertian case, an average height error of 5.4% and an average surface orientation error of 0.15 rad.

[*] S. Elhabian, H. Rara, A. Ali, and A. Farag, “Illumination-invariant statistical shape recovery with contiguous occlusion,” in Eighth Canadian Conference on Computer and Robot Vision (CRV), 2011, pp. 301–308.

Recovered shapes for different YaleB Database subjects under the same illumination conditions as in the previous figure: (a) input image, (b) shapes recovered by photometric stereo using eight images from subsets 1 and 2 with known light directions, (c) recovered shapes, and (d) recovered albedos.

On the medical side, a model-based shape-from-shading approach was proposed that allows plausible human jaw models to be constructed in vivo, without ionizing radiation, using fewer sample points, in order to reduce the cost and intrusiveness of acquiring models of patients’ teeth/jaws over time. Human teeth reflectance was assumed to obey the Wolff-Oren-Nayar model, since experiments showed that the tooth surface obeys microfacet theory. While most shape-from-shading (SFS) approaches assume known surface reflectance parameters and a point light source with a known direction, this work relaxed such assumptions using the harmonic expansion of the image irradiance equation, which made it feasible to incorporate prior information about natural illumination and teeth reflectance characteristics.

Block diagram of the proposed model-based human jaw shape recovery: (a) An aligned ensemble of the shapes and albedos of human jaws is used to build the 3D shape and albedo models. (b) Given the albedo and surface normals (defining the shape) of a certain jaw in the ensemble, appearance bases are constructed using Algorithm 7. Given an input oral cavity image under general unknown illumination and a set of human jaw anatomical landmark points: (c) Dense correspondence is established between the input irradiance and the mean jaw shape using 3D thin-plate splines. (d) The input image, in the reference frame, is projected onto the subspace spanned by the appearance basis of each sample in the ensemble; the bases are scaled by the projection coefficients and summed to construct the harmonic projection (HP) irradiance, which encodes the illumination and reflectance conditions of the input image. These images are then used to construct an HP model of the input image. (e, f) The inherent relation between the HP irradiance and the corresponding shape and albedo is cast in a regression framework, where principal component regression is used to solve for the shape and albedo coefficients to recover the shape and albedo of the input image.

The results demonstrated the effect of adding statistical prior as well as appearance (illumination and reflectance) modeling on the accuracy of the recovered shape. The applicability of the proposed approach to a dental application encompassing tooth restoration was further investigated.

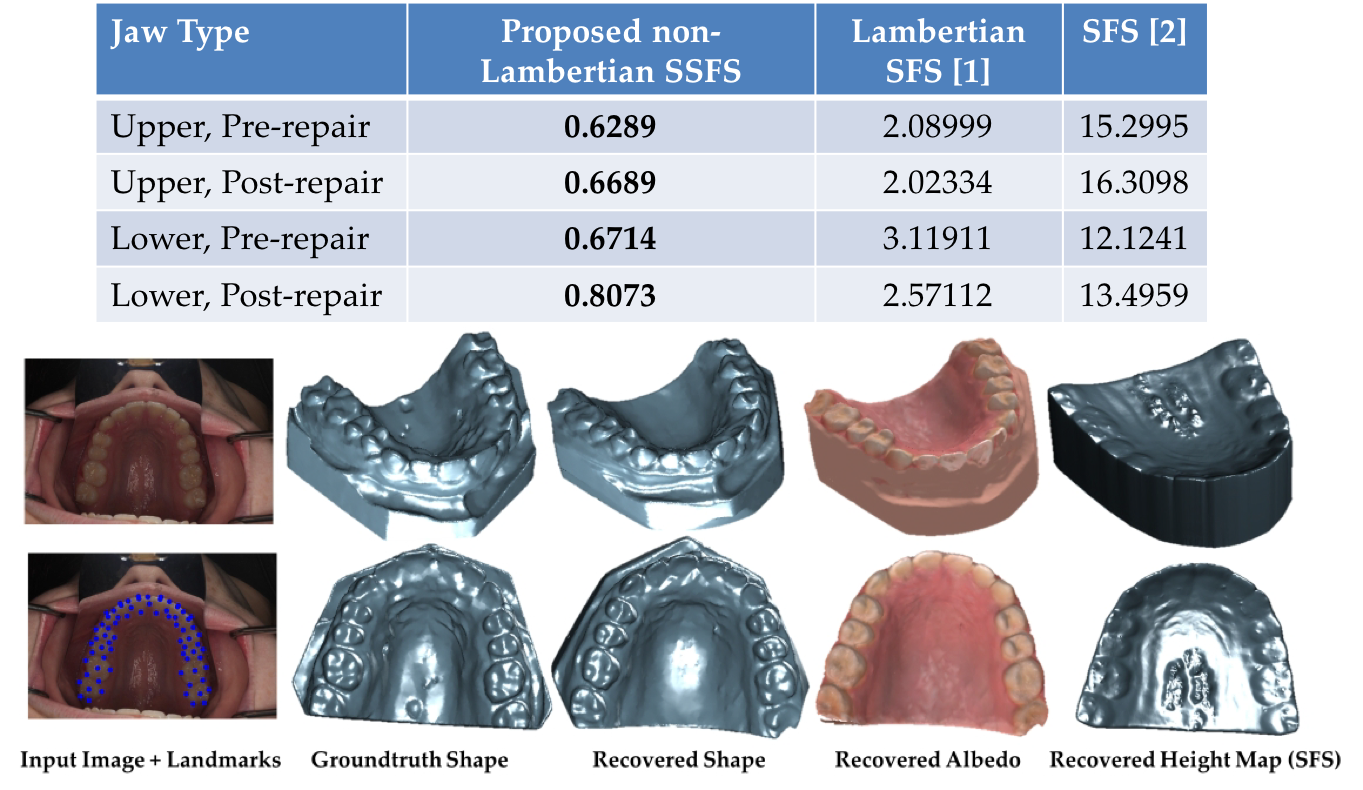

Top: Average whole jaw surface reconstruction accuracy (RMS) in mm. Bottom: Sample reconstruction result of an upper (post-repair) jaw (bottom row shows the top-view of the occlusal surface).

[1] A. Farag, S. Elhabian, A. Abdelrahim, W. Aboelmaaty, A. Farman, and D. Tazman. Model-based human teeth shape recovery from a single optical image with unknown illumination. In MICCAI Medical Computer Vision Workshop (MCV), 2012.

[2] A. Ahmed and A. Farag. Shape from shading under various imaging conditions. In Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR’07), Minneapolis, MN, pages X1-X8, June 18-23, 2007.

Related publications:

Shireen Y. Elhabian and Aly A. Farag. Appearance-based Approach for Complete Human Jaw Shape Reconstruction. IET Computer Vision, 8(5), (2014): 404-418.

Shireen Y. Elhabian, Aly Abdelrahim, Aly Farag, David Tasman, Wael Aboelmaaty, Allan Farman. Clinical Crowns Shape Reconstruction - an Image-based Approach. IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 93-96. IEEE, 2013.

Shireen Y. Elhabian, Eslam Mostafa, Ham Rara, Aly Farag. Non-Lambertian Model-based Facial Shape Recovery from Single Image Under Unknown General Illumination. Ninth Canadian Conference on Computer and Robot Vision (CRV), pp. 252-259. IEEE, 2012.

Shape from shading under near illumination and non-Lambertian reflectance

Joint work with: Aly Farag and Moumen T. El-Melegy

We proposed an accurate 3D representation of the human jaw that can be used for dental diagnosis and treatment. Although there are several challenges, such as the unfriendly image acquisition environment inside the mouth, lighting problems, and errors due to the data acquisition sensors, we focused on the 3D surface reconstruction of human teeth from a single image. We introduced a more realistic formulation of the shape-from-shading (SFS) problem by considering the image formation components: the camera, the light source, and the surface reflectance. We proposed a non-Lambertian SFS algorithm under perspective projection that benefits from camera calibration parameters and takes into account the attenuation of illumination due to near-field imaging. The surface reflectance is modeled using the Oren-Nayar-Wolff model, which accounts for the retro-reflection case. Our experiments provide promising quantitative results supporting the benefits of the proposed approach.
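As a rough sketch of two of the physical effects named above, the code below combines inverse-square falloff from a nearby isotropic point source with the qualitative Oren-Nayar diffuse model, whose roughness \(\sigma\) is the standard deviation of facet slope angles in radians. This substitutes the plain Oren-Nayar model for illustration; the Wolff-Oren-Nayar variant used in this work additionally includes Fresnel terms from Wolff’s subsurface scattering model and is not reproduced. Perspective projection and camera calibration are also omitted, and the function name and parameters are hypothetical.

```python
import numpy as np

def oren_nayar_near(point, normal, light_pos, view_dir, albedo, sigma, intensity=1.0):
    """Radiance of one surface point lit by a nearby isotropic point source.

    Uses inverse-square falloff and the qualitative Oren-Nayar model (sigma in radians).
    This is plain Oren-Nayar, not the Wolff-Oren-Nayar variant.
    """
    n = np.asarray(normal, float)
    n = n / np.linalg.norm(n)
    to_light = np.asarray(light_pos, float) - np.asarray(point, float)
    r2 = to_light @ to_light
    l = to_light / np.sqrt(r2)
    v = np.asarray(view_dir, float)
    v = v / np.linalg.norm(v)
    cos_i, cos_r = n @ l, n @ v
    if cos_i <= 0 or cos_r <= 0:
        return 0.0  # light or viewer below the local surface
    theta_i, theta_r = np.arccos(np.clip([cos_i, cos_r], -1.0, 1.0))
    # Azimuth difference from the projections of l and v onto the tangent plane.
    lt, vt = l - cos_i * n, v - cos_r * n
    denom = np.linalg.norm(lt) * np.linalg.norm(vt)
    cos_phi = (lt @ vt) / denom if denom > 1e-12 else 0.0
    s2 = sigma**2
    A = 1.0 - 0.5 * s2 / (s2 + 0.33)
    B = 0.45 * s2 / (s2 + 0.09)
    alpha, beta = max(theta_i, theta_r), min(theta_i, theta_r)
    irradiance = intensity * cos_i / r2  # near-field: inverse-square falloff
    return albedo / np.pi * irradiance * (A + B * max(0.0, cos_phi) * np.sin(alpha) * np.tan(beta))
```

With `sigma = 0` the model reduces to Lambertian shading attenuated by \(1/r^2\); larger roughness flattens the shading and brightens points seen near the light direction (retro-reflection).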

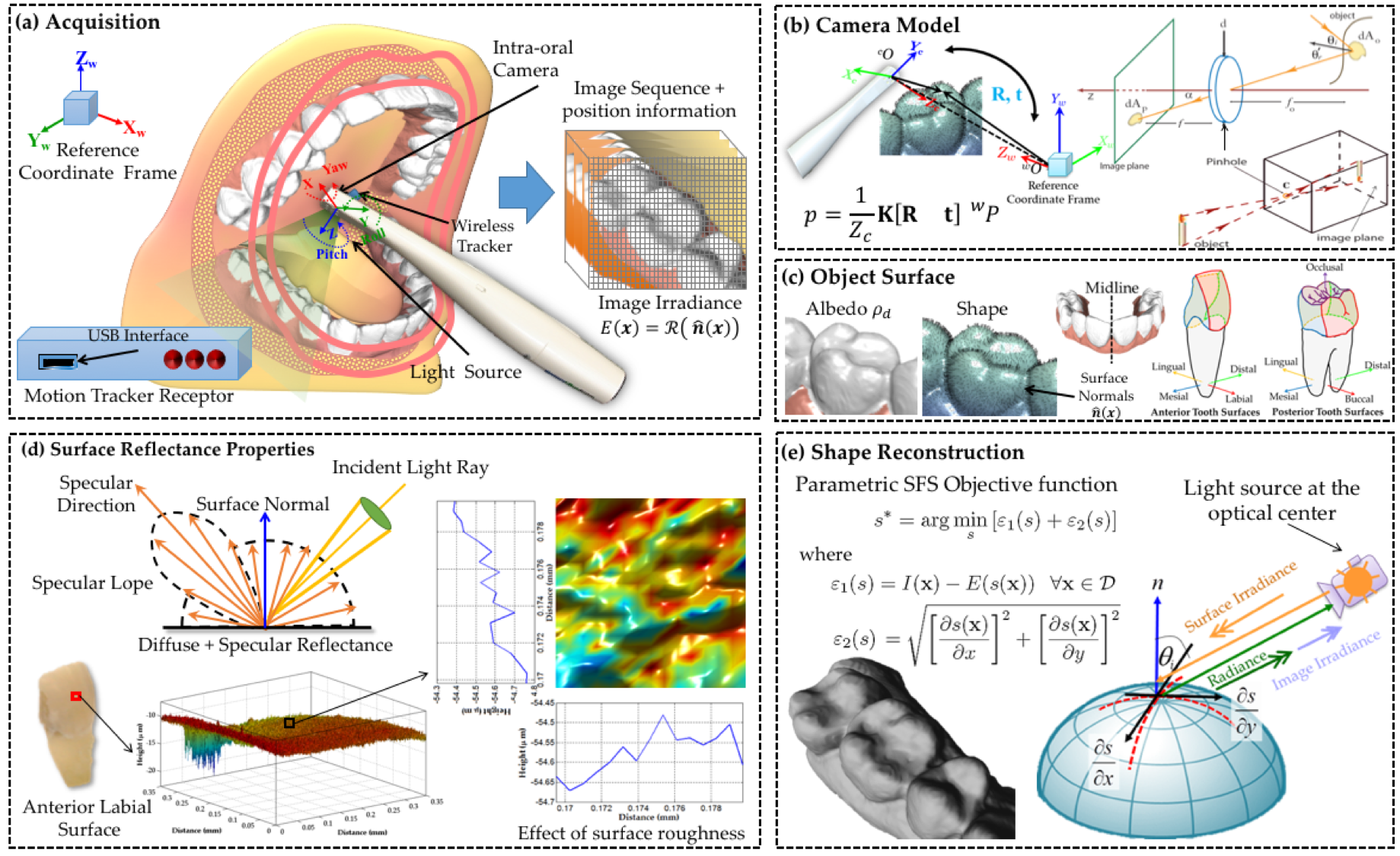

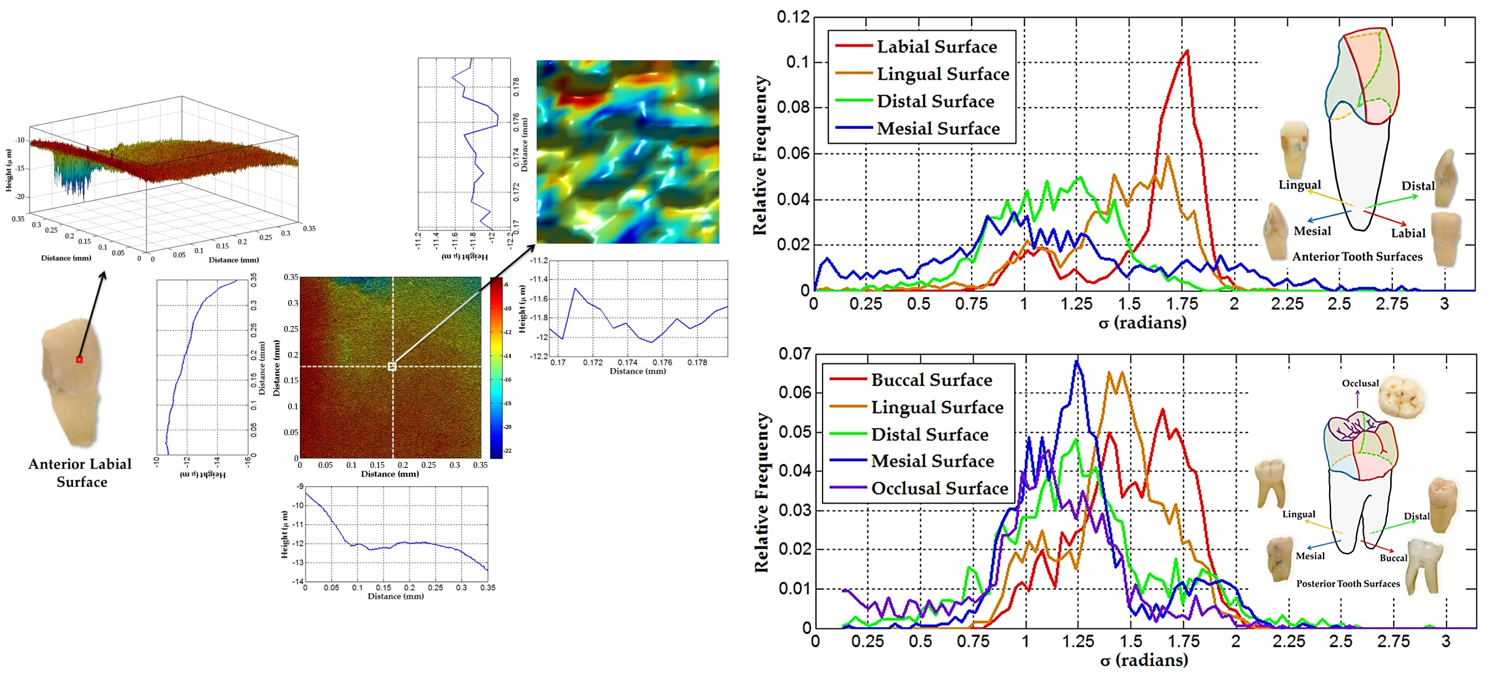

Illustration of the human jaw modelling framework: (a) A calibrated sequence of images is acquired by an intraoral camera equipped with a motion tracker. (b) Pinhole camera model, with calibration and correction of image distortion. (c) Object defined by its shape (surface normal) and albedo. (d) Diffuse and specular components of surface reflectance; the specular lobe arises from surface roughness. Surface height variations of the anterior labial surface are measured by an optical surface profiler. (e) Shape reconstruction based on a single image is formulated as a shape-from-shading (SFS) problem.

Left: Surface height variations of the anterior labial surface. Average horizontal and vertical surface profiles are shown on the measured area (0.35 mm\(^2\)). A zoomed-in view of a 0.01 mm\(^2\) area is also shown, along with its surface profiles. Right: The roughness parameter is estimated from measurements of the microscopic height variation of 0.35 mm\(^2\) surface patches of different surface types for incisor and molar teeth. The intraoral camera pixel size was estimated to cover approximately 0.0075 mm\(^2\). The measured patch is divided into smaller, pixel-sized patches, for each of which the roughness parameter \(\sigma\) is computed. According to the resulting distribution, the parameter tends to lie between 0.7 and 2 radians regardless of the tooth surface type.
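A hedged sketch of such an estimate, assuming (as in the Oren-Nayar microfacet model) that \(\sigma\) is the standard deviation of facet slope angles: slopes along the horizontal and vertical profiles of a measured height patch are converted to angles, the mean angle per direction (a global tilt) is removed, and the spread of the remaining angles is taken. The grid spacing and function name are hypothetical, and the procedure actually used in this work may differ.

```python
import numpy as np

def roughness_sigma(height, spacing):
    """Std (radians) of facet slope angles along rows and columns of a height patch.

    Assumes sigma is the spread of slope angles about the patch's mean tilt.
    """
    dz_dy, dz_dx = np.gradient(np.asarray(height, float), spacing)
    ax, ay = np.arctan(dz_dx), np.arctan(dz_dy)
    # Remove the mean angle per direction so a tilted but smooth patch gives zero.
    angles = np.concatenate([(ax - ax.mean()).ravel(), (ay - ay.mean()).ravel()])
    return float(np.std(angles))
```

Applied to each pixel-sized sub-patch of a profilometer measurement, this would produce a distribution of \(\sigma\) values like the one summarized in the figure.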

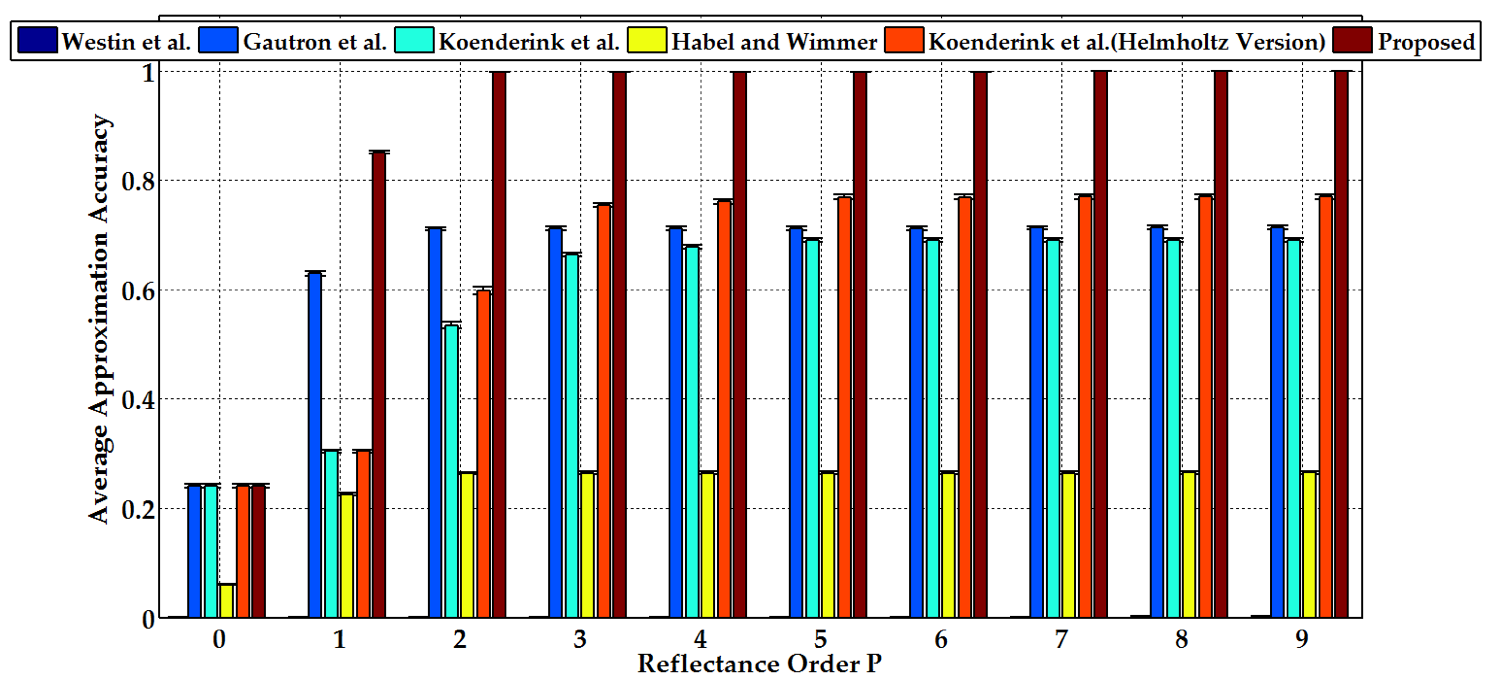

The average approximation accuracy of the Wolff-Oren-Nayar reflection model under distant illumination as a function of the truncating reflectance order P. The domains of the roughness (according to the previous figure) and of the enamel’s refractive index (1.62 \(\pm\) 0.02 [1]) are uniformly sampled. The average is taken over 3750 BRDF samples, where each spectrum is obtained by projecting the Wolff-Oren-Nayar reflectance function, using Monte Carlo integration, onto the subspace spanned by the proposed isotropic basis, in comparison to the bases of Westin et al. [2], Gautron et al. [3], Koenderink et al. [4], Habel and Wimmer [5], and the Helmholtz basis of Koenderink et al. [4], where their isotropic versions are used. Note that the proposed basis provides higher approximation accuracy at lower reflectance orders than the other bases; hence, it provides a compact representation of reflectance functions.
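To make the Monte Carlo projection step concrete, the sketch below estimates expansion coefficients by uniform sampling of the sphere, \(c_l \approx \frac{4\pi}{N}\sum_{j} f(\omega_j)\,Y_l(\omega_j)\). As a stand-in for the Wolff-Oren-Nayar function and the proposed isotropic basis (neither is reproduced here), it projects the clamped cosine \(\max(\cos\theta, 0)\) onto zonal spherical harmonics, whose exact coefficients \(\sqrt{\pi}/2\), \(\sqrt{\pi/3}\), and \(\sqrt{5\pi}/8\) for orders 0 to 2 are known. The sample count and seed are arbitrary.

```python
import numpy as np

def zonal_sh(l, mu):
    """Zonal (m = 0) real spherical harmonics Y_l0 as functions of mu = cos(theta), l <= 2."""
    if l == 0:
        return np.full_like(mu, 1.0 / (2.0 * np.sqrt(np.pi)))
    if l == 1:
        return np.sqrt(3.0 / (4.0 * np.pi)) * mu
    if l == 2:
        return np.sqrt(5.0 / (16.0 * np.pi)) * (3.0 * mu**2 - 1.0)
    raise ValueError("only orders 0-2 are implemented in this sketch")

def mc_project(f, order, n_samples=400_000, seed=0):
    """Monte Carlo estimates of c_l = integral over the sphere of f * Y_l0, for l = 0..order."""
    rng = np.random.default_rng(seed)
    mu = rng.uniform(-1.0, 1.0, n_samples)  # uniform on the sphere => cos(theta) ~ U(-1, 1)
    values = f(mu)
    return np.array([4.0 * np.pi * np.mean(values * zonal_sh(l, mu)) for l in range(order + 1)])

def clamped_cosine(mu):
    return np.maximum(mu, 0.0)

coeffs = mc_project(clamped_cosine, order=2)
exact = np.array([np.sqrt(np.pi) / 2, np.sqrt(np.pi / 3), np.sqrt(5 * np.pi) / 8])
```

Truncating at order \(P\) keeps only \(c_0, \dots, c_P\); the energy left in higher orders is one way to quantify how compactly a basis represents a reflectance function.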

[1] X-J. Wang, T. Milner, J. de Boer, Y. Zhang, D. Pashley, and J. Nelson. Characterization of dentin and enamel by use of optical coherence tomography. Applied Optics, 38(10):2092–2096, Apr 1999.

[2] S. Westin, J. Arvo, and K. Torrance. Predicting reflectance functions from complex surfaces. In Proc. of SIGGRAPH, ACM Press, pages 255–264, 1992.

[3] P. Gautron, J. Krivanek, S. N. Pattanaik, and K. Bouatouch. A novel hemispherical basis for accurate and efficient rendering. In Proceedings of the Fifteenth Eurographics Conference on Rendering Techniques, pages 321–330. Eurographics Association, 2004.

[4] J. Koenderink and A. van Doorn. Phenomenological description of bidirectional surface reflection. Journal of the Optical Society of America, 15(11):2903–2912, 1998.

[5] R. Habel and M. Wimmer. Efficient irradiance normal mapping. In Proceedings of the 2010 ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games, pages 189–195, 2010.

Related publications:

Aly S. Abdelrahim, Aly A. Farag, Shireen Y. Elhabian, Moumen T. El-Melegy. Shape-from-Shading Using Sensor and Physical Object Characteristics Applied to Human Teeth Surface Reconstruction. IET Computer Vision, 8(1), (2014): 1-15.

Aly Abdelrahim, Ahmed Shalaby, Shireen Y. Elhabian, James Graham and Aly Farag. A 3D Reconstruction of the Human Jaw from a Single Image. 20th IEEE International Conference of Image Processing (ICIP), pp. 3622-3626, 2013.

Aly Abdelrahim, Aly Farag, Shireen Y. Elhabian, Eslam Mostafa and Wael Aboelmaaty. Occlusal Surface Reconstruction of Human Teeth from a Single Image Based on Object and Sensor Physical Characteristics. In Image Processing (ICIP), 2012 19th IEEE International Conference on, pp. 1793-1796. IEEE, 2012.

Copyright © 2022 Shireen Y. Elhabian. All rights reserved.