SCI Publications

2011

P.K. Jimack, R.M. Kirby.

“Towards the Development of an h-p-Refinement Strategy Based Upon Error Estimate Sensitivity,” In Computers and Fluids, Vol. 46, No. 1, pp. 277--281. 2011.

The use of (a posteriori) error estimates is a fundamental tool in the application of adaptive numerical methods across a range of fluid flow problems. Such estimates are incomplete, however, in that they do not necessarily indicate where to refine in order to achieve the most impact on the error, nor what type of refinement (for example h-refinement or p-refinement) will be best. This paper extends preliminary work of the authors (Comm Comp Phys, 2010;7:631–8), which uses adjoint-based sensitivity estimates in order to address these questions, to include application with p-refinement to arbitrary order and the use of practical a posteriori estimates. Results are presented which demonstrate that the proposed approach can guide both the h-refinement and the p-refinement processes, to yield improvements in the adaptive strategy compared to the use of more orthodox criteria.

R.M. Kirby, B. Cockburn, S.J. Sherwin.

“To CG or to HDG: A Comparative Study,” In Journal of Scientific Computing, Note: published online, 2011.

DOI: 10.1007/s10915-011-9501-7

Hybridization through the border of the elements (hybrid unknowns) combined with a Schur complement procedure (often called static condensation in the context of continuous Galerkin linear elasticity computations) has in various forms been advocated in the mathematical and engineering literature as a means of accomplishing domain decomposition, of obtaining increased accuracy and convergence results, and of algorithm optimization. Recent work on the hybridization of mixed methods, and in particular of the discontinuous Galerkin (DG) method, holds the promise of capitalizing on the three aforementioned properties; in particular, of generating a numerical scheme that is discontinuous in both the primary and flux variables, is locally conservative, and is computationally competitive with traditional continuous Galerkin (CG) approaches. In this paper we present both implementation and optimization strategies for the Hybridizable Discontinuous Galerkin (HDG) method applied to two-dimensional elliptic operators. We implement our HDG approach within a spectral/hp element framework so that comparisons can be made between HDG and the traditional CG approach.

We demonstrate that the HDG approach generates a global trace space system for the unknown that, although larger in rank than the traditional static condensation system in CG, has significantly smaller bandwidth at moderate polynomial orders. We show that, if one ignores set-up costs, above approximately fourth-degree polynomial expansions on triangles and quadrilaterals the HDG method can be made to be as efficient as the CG approach, making it competitive for time-dependent problems even before taking into consideration other properties of DG schemes such as their superconvergence properties and their ability to handle hp-adaptivity.
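As a rough illustration of the Schur complement (static condensation) procedure mentioned in this abstract, the following is a minimal dense-matrix sketch. It is not the paper's HDG or CG implementation: the function name `static_condensation_solve`, the toy matrix, and the choice of which unknowns count as "boundary" are all assumptions made for the example.

```python
import numpy as np

def static_condensation_solve(K, f, boundary, interior):
    """Solve K u = f by eliminating the interior unknowns first (hypothetical helper)."""
    K_bb = K[np.ix_(boundary, boundary)]
    K_bi = K[np.ix_(boundary, interior)]
    K_ib = K[np.ix_(interior, boundary)]
    K_ii = K[np.ix_(interior, interior)]

    # Local solves with the interior block.
    X = np.linalg.solve(K_ii, K_ib)         # K_ii^{-1} K_ib
    y = np.linalg.solve(K_ii, f[interior])  # K_ii^{-1} f_i

    # Schur complement system for the boundary unknowns only.
    S = K_bb - K_bi @ X
    g = f[boundary] - K_bi @ y

    u = np.empty_like(f, dtype=float)
    u[boundary] = np.linalg.solve(S, g)     # smaller global solve
    u[interior] = y - X @ u[boundary]       # recover interior unknowns
    return u

# Toy symmetric positive definite system (assumed data, for illustration only).
K = np.array([[ 4.0, -1.0,  0.0, -1.0],
              [-1.0,  4.0, -1.0,  0.0],
              [ 0.0, -1.0,  4.0, -1.0],
              [-1.0,  0.0, -1.0,  4.0]])
f = np.array([1.0, 2.0, 3.0, 4.0])
u = static_condensation_solve(K, f, boundary=[0, 3], interior=[1, 2])
```

In a finite element setting the interior block is block-diagonal across elements, so the two interior solves can be done element by element; the sketch uses a single dense block only to keep the algebra visible.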

G. Li, R. Palmer, M. DeLisi, G. Gopalakrishnan, R.M. Kirby.

“Formal Specification of MPI 2.0: Case Study in Specifying a Practical Concurrent Programming API,” In Science of Computer Programming, Vol. 76, pp. 65--81. 2011.

DOI: 10.1016/j.scico.2010.03.007

We describe the first formal specification of a non-trivial subset of MPI, the dominant communication API in high performance computing. Engineering a formal specification for a non-trivial concurrency API requires the right combination of rigor, executability, and traceability, while also serving as a smooth elaboration of a pre-existing informal specification. It also requires the modularization of reusable specification components to keep the length of the specification in check. Long-lived APIs such as MPI are not usually 'textbook minimalistic' because they support a diverse array of applications, a diverse community of users, and have efficient implementations over decades of computing hardware. We choose the TLA+ notation to write our specifications, and describe how we organized the specification of around 200 of the 300 MPI 2.0 functions. We detail a handful of these functions in this paper, and assess our specification with respect to the aforementioned requirements. We close with a description of possible approaches that may help render the act of writing, understanding, and validating the specifications of concurrency APIs much more productive.

T. Martin, E. Cohen, R.M. Kirby.

“Direct Isosurface Visualization of Hex-Based High-Order Geometry and Attribute Representations,” In IEEE Transactions on Visualization and Computer Graphics (TVCG), Vol. PP, No. 99, pp. 1--14. 2011.

ISSN: 1077-2626

DOI: 10.1109/TVCG.2011.103

In this paper, we present a novel isosurface visualization technique that guarantees the accurate visualization of isosurfaces with complex attribute data defined on (un-)structured (curvi-)linear hexahedral grids. Isosurfaces of high-order hexahedral-based finite element solutions on both uniform grids (including MRI and CT scans) and more complex geometry represent a domain of interest that can be rendered using our algorithm. Additionally, our technique can be used to directly visualize solutions and attributes in isogeometric analysis, an area based on trivariate high-order NURBS (Non-Uniform Rational B-splines) geometry and attribute representations for the analysis. Furthermore, our technique can be used to visualize isosurfaces of algebraic functions. Our approach combines subdivision and numerical root-finding to form a robust and efficient isosurface visualization algorithm that does not miss surface features, while finding all intersections between a view frustum and desired isosurfaces. This allows the use of view-independent transparency in the rendering process. We demonstrate our technique through a straightforward CPU implementation on both complex-structured and complex-unstructured geometry with high-order simulation solutions, isosurfaces of medical data sets, and isosurfaces of algebraic functions.

H. Mirzaee, L. Ji, J.K. Ryan, R.M. Kirby.

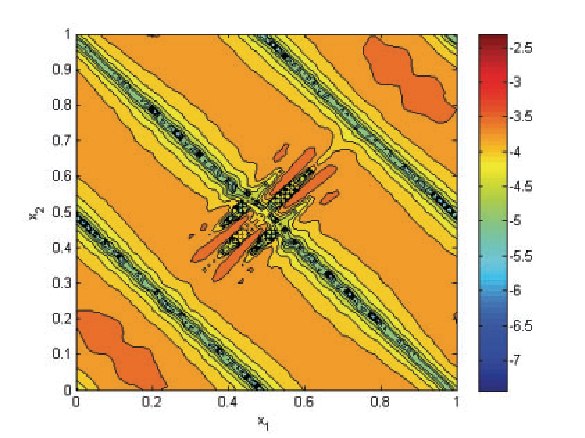

“Smoothness-Increasing Accuracy-Conserving (SIAC) Postprocessing for Discontinuous Galerkin Solutions Over Structured Triangular Meshes,” In SIAM Journal on Numerical Analysis, Vol. 49, No. 5, pp. 1899--1920. 2011.

Theoretically and computationally, it is possible to demonstrate that the order of accuracy of a discontinuous Galerkin (DG) solution for linear hyperbolic equations can be improved from order k+1 to 2k+1 through the use of smoothness-increasing accuracy-conserving (SIAC) filtering. However, it is a computationally complex task to perform this in an efficient manner, which becomes an even greater issue considering nonquadrilateral mesh structures. In this paper, we present an extension of this SIAC filter to structured triangular meshes. The basic theoretical assumption in the previous implementations of the postprocessor limits the use to numerical solutions solved over a quadrilateral mesh. However, this assumption is restrictive, which in turn complicates the application of this postprocessing technique to general tessellations. Additionally, moving from quadrilateral meshes to triangulated ones introduces more complexity in the calculations as the number of integrations required increases. In this paper, we extend the current theoretical results to variable coefficient hyperbolic equations over structured triangular meshes, demonstrate the effectiveness of this postprocessor on structured triangular meshes, and explore the effect of using inexact quadrature. We show that there is a direct theoretical extension to structured triangular meshes for hyperbolic equations with bounded variable coefficients. This is a challenging first step toward implementing SIAC filters for unstructured tessellations. We show that by using the usual B-spline implementation, we are able to improve on the order of accuracy as well as decrease the magnitude of the errors. These results are valid regardless of whether exact or inexact integration is used. The results here demonstrate that it is still possible, both theoretically and computationally, to improve to 2k+1 over the DG solution itself for structured triangular meshes.

H. Mirzaee, J.K. Ryan, R.M. Kirby.

“Efficient Implementation of Smoothness-Increasing Accuracy-Conserving (SIAC) Filters for Discontinuous Galerkin Solutions,” In Journal of Scientific Computing, pp. (in press). 2011.

DOI: 10.1007/s10915-011-9535-x

The discontinuous Galerkin (DG) methods provide a high-order extension of the finite volume method in much the same way as high-order or spectral/hp elements extend standard finite elements. However, lack of inter-element continuity is often contrary to the smoothness assumptions upon which many post-processing algorithms such as those used in visualization are based. Smoothness-increasing accuracy-conserving (SIAC) filters were proposed as a means of ameliorating the challenges introduced by the lack of regularity at element interfaces by eliminating the discontinuity between elements in a way that is consistent with the DG methodology; in particular, high-order accuracy is preserved and in many cases increased. The goal of this paper is to explicitly define the steps to efficient computation of this filtering technique as applied to both structured triangular and quadrilateral meshes. Furthermore, as the SIAC filter is a good candidate for parallelization, we provide, for the first time, results that confirm anticipated performance scaling when parallelized on a shared-memory multi-processor machine.

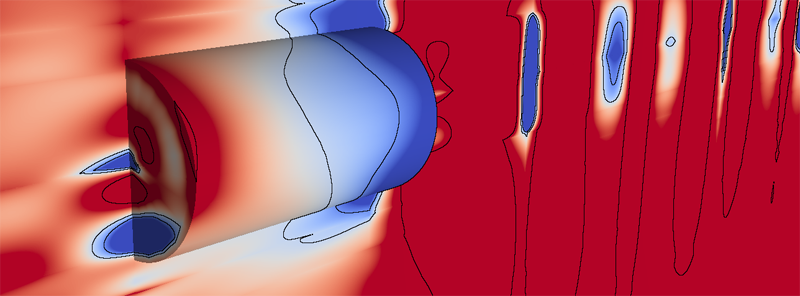

B. Nelson, R. Haimes, R.M. Kirby.

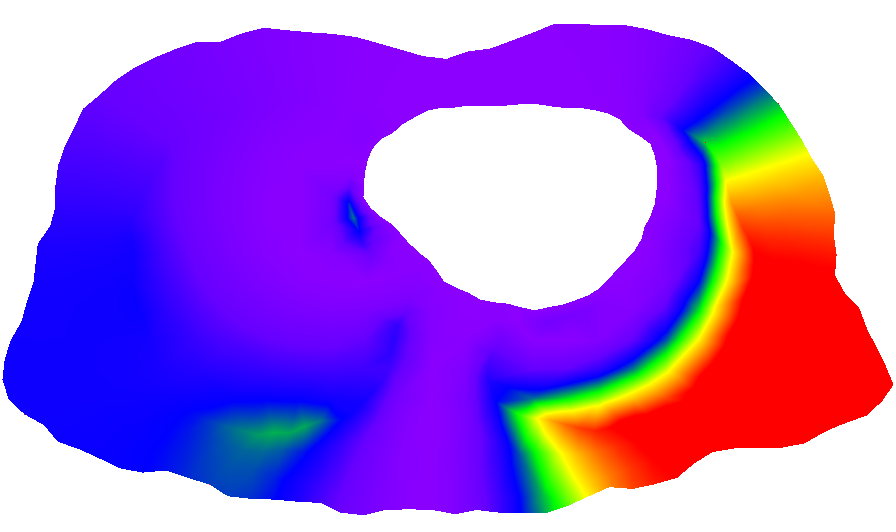

“GPU-Based Interactive Cut-Surface Extraction From High-Order Finite Element Fields,” In IEEE Transactions on Visualization and Computer Graphics (IEEE Visualization Issue), Vol. 17, No. 12, pp. 1803--1811. 2011.

We present a GPU-based ray-tracing system for the accurate and interactive visualization of cut-surfaces through 3D simulations of physical processes created from spectral/hp high-order finite element methods. When used by the numerical analyst to debug the solver, the ability for the imagery to precisely reflect the data is critical. In practice, the investigator interactively selects from a palette of visualization tools to construct a scene that can answer a query of the data. This is effective as long as the implicit contract of image quality between the individual and the visualization system is upheld. OpenGL rendering of scientific visualizations has worked remarkably well for exploratory visualization for most solver results. This is due to the consistency between the use of first-order representations in the simulation and the linear assumptions inherent in OpenGL (planar fragments and color-space interpolation). Unfortunately, the contract is broken when the solver discretization is of higher-order. There have been attempts to mitigate this through the use of spatial adaptation and/or texture mapping. These methods do a better job of approximating what the imagery should be but are not exact and tend to be view-dependent. This paper introduces new rendering mechanisms that specifically deal with the kinds of native data generated by high-order finite element solvers. The exploratory visualization tools are reassessed and cast in this system with the focus on image accuracy. This is accomplished in a GPU setting to ensure interactivity.

D.J. Swenson, S.E. Geneser, J.G. Stinstra, R.M. Kirby, R.S. MacLeod.

“Cardiac Position Sensitivity Study in the Electrocardiographic Forward Problem Using Stochastic Collocation and Boundary Element Methods,” In Annals of Biomedical Engineering, Vol. 39, No. 12, pp. 2900--2910. 2011.

DOI: 10.1007/s10439-011-0391-5

PubMed ID: 21909818

PubMed Central ID: PMC336204

The electrocardiogram (ECG) is ubiquitously employed as a diagnostic and monitoring tool for patients experiencing cardiac distress and/or disease. It is widely known that changes in heart position resulting from, for example, posture of the patient (sitting, standing, lying) and respiration significantly affect the body-surface potentials; however, few studies have quantitatively and systematically evaluated the effects of heart displacement on the ECG. The goal of this study was to evaluate the impact of positional changes of the heart on the ECG in the specific clinical setting of myocardial ischemia. To carry out the necessary comprehensive sensitivity analysis, we applied a relatively novel and highly efficient statistical approach, the generalized polynomial chaos-stochastic collocation method, to a boundary element formulation of the electrocardiographic forward problem, and we drove these simulations with measured epicardial potentials from whole-heart experiments. Results of the analysis identified regions on the body-surface where the potentials were especially sensitive to realistic heart motion. The standard deviation (STD) of ST-segment voltage changes caused by the apex of a normal heart swinging forward and backward or side-to-side was approximately 0.2 mV. Variations were even larger, 0.3 mV, for a heart exhibiting elevated ischemic potentials. These variations could be large enough to mask or to mimic signs of ischemia in the ECG. Our results suggest possible modifications to ECG protocols that could reduce the diagnostic error related to postural changes in patients possibly suffering from myocardial ischemia.
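The idea behind stochastic collocation, as named in this abstract, can be sketched in one dimension: run a deterministic model at a few quadrature nodes of the uncertain parameter and combine the outputs with quadrature weights to estimate the mean and standard deviation. The sketch below assumes a single standard-normal parameter and uses a hypothetical `toy_model` in place of a boundary element forward solve; it is not the study's actual setup.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def collocation_mean_std(model, n_nodes=5):
    """Estimate mean and standard deviation of model(Z) for Z ~ N(0, 1)."""
    nodes, weights = hermegauss(n_nodes)           # Gauss-Hermite (probabilists') rule
    weights = weights / weights.sum()              # normalize so the weights sum to 1
    values = np.array([model(z) for z in nodes])   # one deterministic run per node
    mean = np.sum(weights * values)
    var = np.sum(weights * (values - mean) ** 2)
    return mean, np.sqrt(var)

def toy_model(z):
    """Hypothetical stand-in for a forward simulation driven by one random input."""
    return 1.0 + 0.2 * z + 0.05 * z**2

mean, std = collocation_mean_std(toy_model)
```

For this quadratic toy model the rule is exact, so the estimates match the analytic mean 1.05 and variance 0.045; a real forward problem would need enough nodes for the response to be well approximated by a polynomial.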

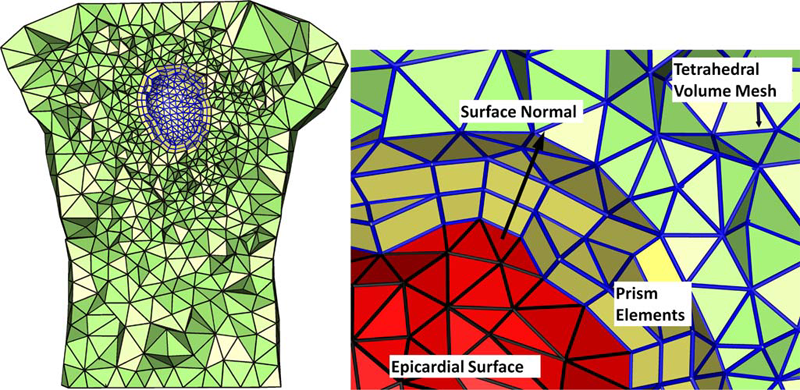

D. Wang, R.M. Kirby, C.R. Johnson.

“Finite Element Based Discretization and Regularization Strategies for 3D Inverse Electrocardiography,” In IEEE Transactions on Biomedical Engineering, Vol. 58, No. 6, pp. 1827--1838. 2011.

PubMed ID: 21382763

PubMed Central ID: PMC3109267

We consider the inverse electrocardiographic problem of computing epicardial potentials from a body-surface potential map. We study how to improve numerical approximation of the inverse problem when the finite-element method is used. Being ill-posed, the inverse problem requires different discretization strategies from its corresponding forward problem. We propose refinement guidelines that specifically address the ill-posedness of the problem. The resulting guidelines necessitate the use of hybrid finite elements composed of tetrahedra and prism elements. Also, in order to maintain consistent numerical quality when the inverse problem is discretized into different scales, we propose a new family of regularizers using the variational principle underlying finite-element methods. These variational-formed regularizers serve as an alternative to the traditional Tikhonov regularizers, but preserve the L2 norm and thereby achieve consistent regularization in multiscale simulations. The variational formulation also enables a simple construction of the discrete gradient operator over irregular meshes, which is difficult to define in traditional discretization schemes. We validated our hybrid element technique and the variational regularizers by simulations on a realistic 3-D torso/heart model with empirical heart data. Results show that discretization based on our proposed strategies mitigates the ill-conditioning and improves the inverse solution, and that the variational formulation may benefit a broader range of potential-based bioelectric problems.
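For readers unfamiliar with the traditional Tikhonov regularizers that this abstract contrasts against, a minimal sketch follows. It shows only the classical formulation, minimizing ||A x - b||^2 + lam^2 ||L x||^2 via the normal equations, and not the variational regularizers proposed in the paper. The function name `tikhonov_solve` and the toy matrix are assumptions for illustration.

```python
import numpy as np

def tikhonov_solve(A, b, lam, L=None):
    """Minimize ||A x - b||^2 + lam^2 ||L x||^2 using the normal equations."""
    n = A.shape[1]
    if L is None:
        L = np.eye(n)  # zeroth-order Tikhonov: penalize the solution norm
    return np.linalg.solve(A.T @ A + lam**2 * (L.T @ L), A.T @ b)

# Toy ill-conditioned problem (assumed data): the two columns are nearly parallel.
A = np.array([[1.0, 1.0],
              [1.0, 1.0001],
              [1.0, 0.9999]])
x_true = np.array([1.0, 2.0])
b = A @ x_true
x_reg = tikhonov_solve(A, b, lam=1e-2)
```

The regularized solution still reproduces the data closely but has a smaller norm than `x_true`, because the poorly determined component is damped. Forming the normal equations is fine for a toy problem; for larger or worse-conditioned systems an SVD or QR based solve is usually preferred.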

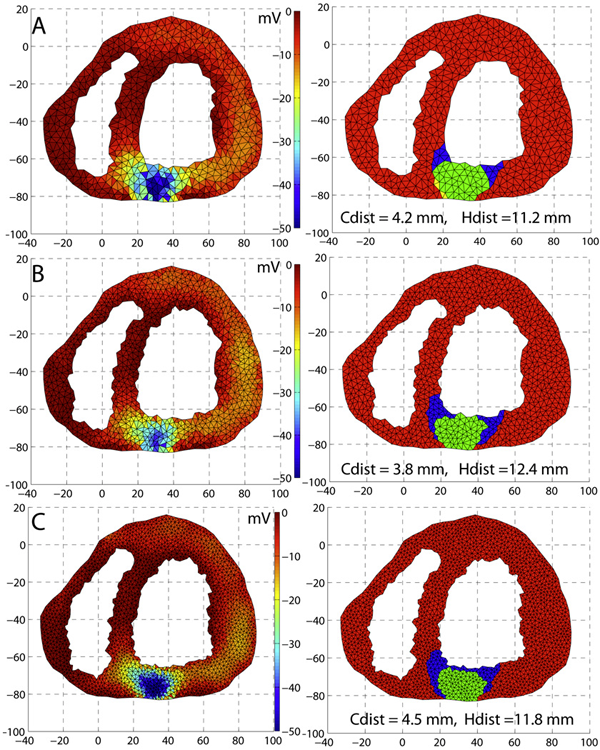

D. Wang, R.M. Kirby, R.S. MacLeod, C.R. Johnson.

“An optimization framework for inversely estimating myocardial transmembrane potentials and localizing ischemia,” In Proceedings of the International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS), pp. 1680--1683. 2011.

DOI: 10.1109/IEMBS.2011.6090483

PubMed ID: 22254648

PubMed Central ID: PMC3336368

By combining a static bidomain heart model with a torso conduction model, we studied the inverse electrocardiographic problem of computing the transmembrane potentials (TMPs) throughout the myocardium from a body-surface potential map, and then used the recovered potentials to localize myocardial ischemia. Our main contribution is solving the inverse problem within a constrained optimization framework, which is a generalization of previous methods for calculating transmembrane potentials. The framework offers ample flexibility for users to apply various physiologically-based constraints, and is well supported by mature algorithms and solvers developed by the optimization community. By avoiding the traditional inverse ECG approach of building the lead-field matrix, the framework greatly reduces computational cost and, by setting the associated forward problem as a constraint, the framework enables one to flexibly set individualized resolutions for each physical variable, a desirable feature for balancing model accuracy, ill-conditioning and computational tractability. Although the task of computing myocardial TMPs at an arbitrary time instant remains an open problem, we showed that it is possible to obtain TMPs with moderate accuracy during the ST segment by assuming all cardiac cells are at the plateau phase. Moreover, the calculated TMPs yielded a good estimate of ischemic regions, which was of more clinical interest than the voltage values themselves. We conducted finite element simulations of a phantom experiment over a 2D torso model with synthetic ischemic data. Preliminary results indicated that our approach is feasible and suitably accurate for the common case of transmural myocardial ischemia.

C. Yang, D. Xiu, R.M. Kirby.

“Visualization of Covariance and Cross-covariance Field,” In International Journal for Uncertainty Quantification, Vol. 3, No. 1, pp. 25--38. 2011.

DOI: 10.1615/Int.J.UncertaintyQuantification.2011003369

We present a numerical technique to visualize covariance and cross-covariance fields of a stochastic simulation. The method is local in the sense that it demonstrates the covariance structure of the solution at a point with its neighboring locations. When coupled with an efficient stochastic simulation solver, our framework allows one to visualize, effectively and concurrently, both the mean and (cross-)covariance information for two-dimensional (spatial) simulation results. Most importantly, the visualization provides the scientist with a means to identify interesting correlation structure in the solution field. The mathematical setup is discussed, along with several examples to demonstrate the efficacy of this approach.
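The "local" covariance described in this abstract, that of the solution at a point with its neighboring locations, can be estimated from an ensemble of field samples. The sketch below is a generic sample-covariance computation and not the paper's visualization technique; the function name `local_covariance`, the `radius` parameter, and the random ensemble are assumptions for illustration.

```python
import numpy as np

def local_covariance(samples, i, j, radius=1):
    """Sample covariance between the field at (i, j) and each neighbour within `radius`.

    `samples` has shape (n_samples, ny, nx): one 2D field per realization.
    """
    n_samples, ny, nx = samples.shape
    centered = samples - samples.mean(axis=0)    # remove the mean field
    ref = centered[:, i, j]                      # fluctuations at the reference point
    i0, i1 = max(i - radius, 0), min(i + radius + 1, ny)
    j0, j1 = max(j - radius, 0), min(j + radius + 1, nx)
    patch = centered[:, i0:i1, j0:j1]            # fluctuations in the neighbourhood
    # Contract over the sample axis; the result has the shape of the patch.
    return np.tensordot(ref, patch, axes=(0, 0)) / (n_samples - 1)

# Hypothetical ensemble of 200 realizations on an 8 x 8 grid.
rng = np.random.default_rng(0)
samples = rng.normal(size=(200, 8, 8))
cov = local_covariance(samples, 4, 4)
```

The entry of `cov` at the reference point itself is the sample variance there, which gives a quick sanity check on the computation.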

Keywords: netl

2010

T. Etiene, L.G. Nonato, C.E. Scheidegger, J. Tierny, T.J. Peters, V. Pascucci, R.M. Kirby, C.T. Silva.

“Topology Verification for Isosurface Extraction,” SCI Technical Report, No. UUSCI-2010-003, SCI Institute, University of Utah, 2010.

S.E. Geneser, J.D. Hinkle, R.M. Kirby, Brian Wang, B. Salter, S. Joshi.

“Quantifying Variability in Radiation Dose Due to Respiratory-Induced Tumor Motion,” In Medical Image Analysis, Vol. 15, No. 4, pp. 640--649. 2010.

DOI: 10.1016/j.media.2010.07.003

P.K. Jimack, R.M. Kirby.

“Towards the Development of an h-p-Refinement Strategy Based Upon Error Estimate Sensitivity,” In Computers and Fluids, Vol. 46, No. 1, pp. 277--281. 2010.

DOI: 10.1016/j.compfluid.2010.08.003

H. Mirzaee, J.K. Ryan, R.M. Kirby.

“Quantification of Errors Introduced in the Numerical Approximation and Implementation of Smoothness-Increasing Accuracy Conserving (SIAC) Filtering of Discontinuous Galerkin (DG) Fields,” In Journal of Scientific Computing, Vol. 45, pp. 447--470. 2010.

M. Steffen, R.M. Kirby, M. Berzins.

“Decoupling and Balancing of Space and Time Errors in the Material Point Method (MPM),” In International Journal for Numerical Methods in Engineering, Vol. 82, No. 10, pp. 1207--1243. 2010.

P.E.J. Vos, S.J. Sherwin, R.M. Kirby.

“h-p Efficiently: Implementing Finite and Spectral/hp Element Methods to Achieve Optimal Performance for Low- and High-Order Discretisations,” In Journal of Computational Physics, Vol. 229, No. 13, pp. 5161--5181. 2010.

D.F. Wang, R.M. Kirby, C.R. Johnson.

“Resolution Strategies for the Finite-Element-Based Solution of the ECG Inverse Problem,” In IEEE Transactions on Biomedical Engineering, Vol. 57, No. 2, pp. 220--237. February, 2010.

D.F. Wang, R.M. Kirby, R.S. MacLeod, C.R. Johnson.

“A New Family of Variational-Form-Based Regularizers for Reconstructing Epicardial Potentials from Body-Surface Mapping,” In Computing in Cardiology, 2010, pp. 93--96. 2010.

2009

S.E. Geneser, R.M. Kirby, Brian Wang, B. Salter, S. Joshi.

“Incorporating patient breathing variability into a stochastic model of dose deposition for stereotactic body radiation therapy,” In Information Processing in Medical Imaging, Lecture Notes in Computer Science LNCS, Vol. 5636, pp. 688--700. 2009.

PubMed ID: 19694304